This paper describes the evolution of the “intentional” model for stakeholder feedback that mitigates risk and improves outcomes. The All of Us Research Program is dedicated to accelerating health research to enable individualized prevention, treatment, and care for all of us. Since the program’s beginning, we have committed to returning research results to our participants, including personalized DNA results. Given the diversity of our participant population and their varied experiences with medical research, we knew returning results would require empathy and diligence. To ensure an inclusive and accessible experience for all participants, we introduced intentional friction by slowing down and soliciting feedback from our participants and other stakeholders.

INTRODUCTION

For those of us who have access to health care, if we get sick—whether it’s as familiar as allergies or as overwhelming as heart disease—most of us will receive the same medical treatment as everyone else with the same diagnosis. This one-size-fits-all approach to health care prioritizes the diagnosis and often ignores many other factors that may have increased our risk for disease in the first place or the factors that may contribute to or inhibit healing. Some of these “factors” include the places in which we live and work, the cultures and habits that inform our lifestyle, and the interactions of our genes with each other and our environments.

In our lifetimes, we’ve witnessed more medical breakthroughs than our parents and grandparents did. So why does personalized health care still elude us? Unlike the current one-size-fits-all approach to health care, personalized medicine—also called precision medicine—is a new approach to improving health, treating disease, and finding cures. It acknowledges that each person is unique—our genes, our environments, our lifestyles, our behaviors—and that the interaction of these factors greatly impacts our health. Precision medicine aims to deliver the right treatment for the right person at the right time, and keep people healthy longer. Ultimately, precision medicine can produce more accurate diagnoses, earlier detection, and better prevention strategies and treatment choices.

One of the challenges to making precision medicine accessible to all is that medical research historically has not included all of us. Consider genomics research. Most of what we understand about genomics (which looks at all of a person’s genes, versus genetics, which looks at specific genes) and the role of genomic factors in health and disease is based on DNA from men of European descent (“Genetics vs. Genomics Fact Sheet” 2018; “Diversity in Genomic Research” 2023). This lack of diversity in genomic research slows down the potential of precision medicine and contributes to health inequities (Popejoy and Fullerton 2016; Sirugo, Williams, and Tishkoff 2019; Wojcik et al. 2019).

Increasing diversity in genomics research means acknowledging and addressing the root causes for the missing diversity. These include (to name a few):

- Enduring mistrust in medical research due to historic events of mistreatment and abuse (Clark et al. 2019; Kraft et al. 2018), such as the Tuskegee syphilis study (“Tuskegee Study and Health Benefit Program” 2023).

- Experiences with discrimination in medical settings (Kraft et al. 2018).

- English-language skills and other cultural barriers (Kraft et al. 2018).

- Transportation and other logistical barriers (Clark et al. 2019).

- Lack of diversity among researchers (Sierra-Mercado and Lazaro-Munoz 2018).

Additionally, it must be acknowledged that individual minority groups are not homogeneous. For example, our colleagues from the Asian Health Coalition note that there are more than 200 subgroups within the Asian American, Native Hawaiian, and Pacific Islander (AANHPI) population, reflecting a diversity in cultures and languages. Likewise, more than 60 million Hispanics/Latinos reside in the United States with origins from at least 17 countries (Moslimani, Lopez, and Noe-Bustamante 2023).

About the All of Us Research Program

The All of Us Research Program is on a mission to accelerate health research to enable individualized prevention, treatment, and care for all of us. Part of the National Institutes of Health, All of Us is inviting at least one million U.S. residents who reflect the diversity of the country to enroll in the program and share their medical information for research. This includes sharing a blood or saliva sample for DNA research.

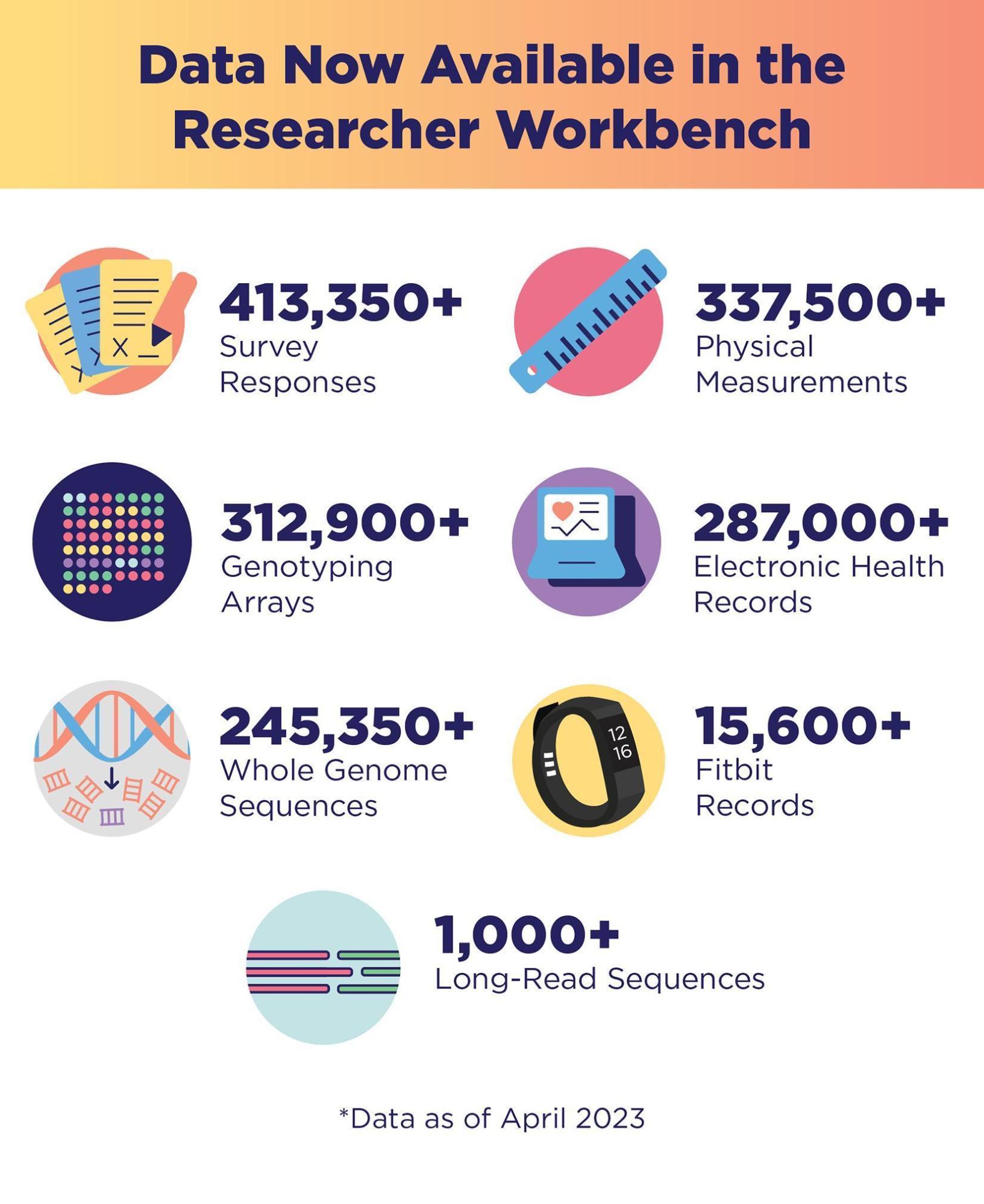

As of September 2023, more than 710,000 people have signed up for All of Us, including 491,000 who have completed all of the program’s initial steps. Of these, 80% identify with a group that has been left out of medical research in the past. This includes people who self-identify as racial and ethnic minorities, as well as many other populations that are considered underrepresented in biomedical research (UBR) because of their self-reported gender identity, sexual orientation, annual income, education, geographic residence, and physical and cognitive disabilities. As of April 2023, data from more than 413,000 participants have been made available for research (“All of Us Research Program Makes Nearly 250000 Whole Genome Sequences Available to Advance Precision Medicine” 2023). This includes whole genome sequences from nearly 250,000 participants (Figure 1). Bringing such diversity to medical research, in general, and genomics research, in particular, is critical for achieving precision medicine for all of us (All of Us Research Program Investigators et al. 2019; Ramirez et al. 2022).

All of Us attributes our successes to our core values (“Core Values” 2021):

- Participation is open to all.

- Participants reflect the rich diversity of the United States.

- Participants are partners.

- Transparency earns trust.

- Participants have access to their information.

- Data are broadly accessible for research purposes.

- Security and privacy are of highest importance (“Precision Medicine Initiative: Privacy and Trust Principles” 2022; “Precision Medicine Initiative: Data Security Policy Principles and Framework Overview” 2022).

- The program will be a catalyst for positive change in research.

Our core values have also helped redefine the traditional relationship between researcher and study participant by putting the participant first. Key among these is the program’s commitment to the third, fourth, fifth, and eighth values listed above: treating participants as partners, earning trust through transparency, giving participants access to their information, and being a catalyst for positive change in research. All of Us has prioritized giving our participants and other stakeholders a voice in the development of the program to help gain their trust and design experiences that are accessible and inclusive to all. In the five years since All of Us launched in 2018, there have been many opportunities for our stakeholders, including participants, frontline staff, and community partners, to give feedback and help create the participant experiences.

Stakeholder Engagement in the Return of DNA Results

Advances in whole genome sequencing techniques have prompted an important debate in genomics research: Is it time to return health-related genomic information to study participants, and if so, what information is ethically responsible to return (Wolf 2012)? For All of Us, this debate was settled early. We have been committed since the program’s beginning to return research results to participants for free, including personalized DNA results. This includes two health-related DNA results:

- Hereditary Disease Risk: whether they have a higher risk for certain inherited health conditions (“Hereditary Disease Risk | Join All of Us”, n.d.).

- Medicine and Your DNA: how their bodies might react to certain medications (“Medicine and Your DNA | Join All of Us”, n.d.).

While we knew that offering health-related DNA results would motivate many participants to join All of Us, we also knew that returning these DNA results would require utmost care and responsibility for several reasons. Some of these included:

- Enduring mistrust in medical research and in government due to historic mistreatment may create apprehension among many participants who identify with a UBR population.

- Our online-first experience can be a burden for those with limited digital literacy and a barrier for those with broadband accessibility issues.

- Limited health or genomics literacy may lead to confusion and anxiety among participants (Schillinger 2020).

- Sharing medically actionable results with participants who may not have access to health care may lead to increased anxiety and fear.

As such, we approached the return of health-related DNA results for participants with empathy and diligence. We looked for opportunities to slow down and create intentional friction to generate traction with our stakeholders and create a better experience overall. We focused our efforts on clearly communicating the risks and benefits of getting health-related DNA results, designing an accessible and easy-to-use participant experience, providing plain language educational materials about DNA and genomics, and including free genetic counseling to ensure participants could talk to a trained counselor about their individual results.

However, this careful approach created new challenges for the project team. Setting up the infrastructure to responsibly return health-related DNA results took longer than anticipated, which elicited questions and frustration from some participants who were eager to get their health-related information sooner. To preserve participants’ trust, we strengthened our commitment to involving stakeholders throughout the process of returning health-related DNA results.

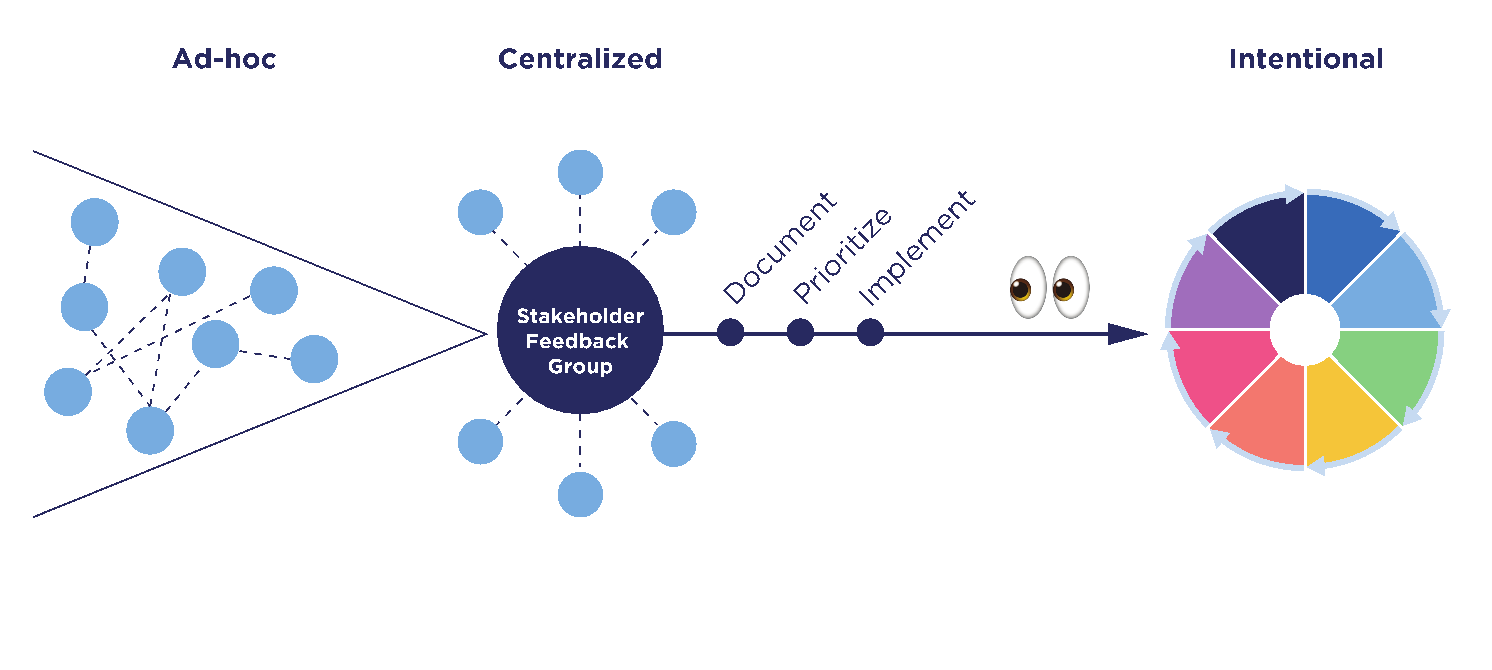

In this paper, we’ll describe the evolution of our approach to engage our participants and other stakeholders to share feedback with us. This evolution had three phases, beginning with an “ad-hoc” phase, then adopting a “centralized” approach, and later envisioning an “intentional” model. During the first “ad-hoc” phase, there was no central organization. Different teams were requesting stakeholder feedback using different methods and sharing the feedback they gathered in different ways. This is historically how we captured stakeholder feedback, so it was not “wrong” per se. It just was not as “right” as it could be, given the sensitive nature of DNA results. As we got closer to launching the return of health-related DNA results, we realized we needed better organization. So, in the second phase, we created an official Stakeholder Feedback Working Group. This group included representatives from various All of Us divisions, including Medical and Scientific Research, Communications, User Experience (UX), Engagement and Outreach, Product, Policy, and others. During this “centralized” phase, the Stakeholder Feedback Working Group established a process to better document, prioritize, and implement feedback. After launching health-related DNA results to participants, we reflected on our process and the lessons learned along the way. Based on that reflection, we proposed a more intentional stakeholder feedback model that we intend to adopt going forward.

WHAT’S AT STAKE IF WE DON’T IMPROVE OUR PROCESS

Because of our commitment to enroll UBR populations, many of whom are justifiably mistrustful due to historic events of mistreatment in medical research, any effort to engage our stakeholders inherently risks generating more mistrust instead of rebuilding trust. That risk increases if stakeholder engagement is approached without sufficient rigor and intention. This is especially true in the context of returning health-related DNA results to participants but is relevant across every participant touchpoint the program develops. We might compromise participants’ trust in and commitment to the program if we do not approach stakeholder feedback with careful planning and intention. For example:

- If we are not clear to stakeholders about what we are doing with their feedback, it could appear that we are just going through the motions and giving the impression of valuing their input while not acting on it.

- If we present work that does not incorporate feedback we previously collected from stakeholders, they may think we did not listen to them and may feel disrespected.

- By demonstrating a lack of coordination in our feedback collection processes, we may cause stakeholders to question our professionalism and our capabilities in general, including the ability to safeguard their data effectively.

These are not merely hypothetical outcomes but real responses that we heard from some of the stakeholders whom we engaged during our initial ad-hoc approach. If through this process we disengage these stakeholders for any reason (whether it’s increased mistrust, perceived disrespect, or perceived ineptitude), we will not be able to achieve our goal of precision medicine for everyone, which is dependent on diverse representation in our dataset. To avoid this potentially disappointing outcome, we aspired to learn from our mistakes and envisioned this model to approach stakeholder feedback with rigor and intention.

HOW WE DID IT

The “Ad-Hoc” Phase

Returning health-related DNA results to our participants was a complex, multi-year process. Success required meticulous coordination and teamwork across multiple divisions within All of Us and with our technology and scientific partners. The participant-facing experience included a coordinated digital communications campaign and digital user experience designed to meet several requirements from both the Food and Drug Administration and our independent research ethics board. Some of these requirements included:

- Explaining all the benefits and risks of getting health-related DNA results upfront.

- Letting participants decide if they want their health-related DNA results.

- Notifying and providing participants access to their results when ready.

- Providing participants with access to a genetic counseling resource and supporting educational materials.

We were committed to including participants, our primary stakeholders, and participant representatives in creating every facet of the experience. All of Us has several participant advisory boards we were able to engage for feedback. We also used usability testing platforms to reach proxy participants: adults not enrolled in All of Us who fit the demographic profile of certain UBR populations. In addition, we engaged the staff at several of our health care provider organizations and community partner groups, which play a key role in recruiting and engaging with participants. We also recruited subject matter experts from several organizations, including the American Association on Health and Disability, Asian Health Coalition, Essentia Health, FiftyForward, Jackson-Hinds Comprehensive Health Center, National Alliance for Hispanic Health, and PRIDEnet, plus All of Us colleagues with expertise in American Indian/Alaska Native engagement efforts and ethical, legal, and social implications of genomic research, to advise us on potential culturally sensitive issues with the content of the DNA reports.

Various divisions and cross-disciplinary teams solicited feedback from these stakeholders through multiple methods, including webinars, surveys, focus groups, listening sessions, comprehension testing, content reviews, usability testing, a Cultural Awareness Committee, and a friends and family soft launch.

While well intentioned, this ad-hoc approach applied by various divisions created challenges for the project team attempting to respond to and implement the feedback. Since we had not intentionally designed these points of friction, they were signals to us that we needed to improve our stakeholder feedback process.

REFLECTING ON LESSONS LEARNED TO IMPROVE OUR APPROACH

The “Centralization” Phase

After most feedback had been collected and some had already been addressed, a cross-disciplinary team, called the Stakeholder Feedback Working Group, convened to take stock of the feedback that had been gathered. This group included representatives from various All of Us divisions, including Medical and Scientific Research, Communications, UX, Engagement and Outreach, Product, Policy, and others. At this time, the Working Group recognized our commitment to gathering stakeholder feedback had resulted in an unexpected challenge: We had received a lot of feedback, and we could not implement all the feedback in time for our launch. To address this challenge, we established a process to better document, organize, and prioritize the feedback. We call this our “centralization” phase.

Centralization of our feedback process proved effective for several reasons and is evidence of the value of creating intentional friction, that is, slowing down to speed up. Feedback was documented centrally, prioritized using an objective scoring model, and implemented in a more organized fashion. By documenting all the feedback collected by various divisions in one place—in our case a single spreadsheet—and organizing it so the feedback could be sorted and filtered, all teams engaging stakeholders could see what feedback had already been gathered. This prevented us from making duplicative feedback requests, which reduced the burden on our stakeholders. This approach made it easier to see patterns quickly, so similar feedback from different stakeholder groups could be flagged for priority attention. Similarly, this approach also allowed us to quickly tag feedback that was out of scope or unactionable, reducing the quantity of feedback that required review and prioritization.

As we continued to gather feedback, the Working Group documented it in our centralized spreadsheet and met regularly to review it. Having all the feedback in one place also made it easier for the Working Group to score the feedback and prioritize the high-scoring feedback for action. This transparency ensured that each division could weigh in on the level of effort it would take their team to implement changes based on the feedback and identify feedback others might not realize would impact their division. It was also during centralization that we realized we needed to identify a “decider” who would ultimately determine how to resolve conflicting feedback from stakeholders and conflicting opinions among members of the Working Group from different divisions. Finally, centralizing documentation of the stakeholder feedback allowed the Working Group to more easily track progress as feedback was addressed and better organize a backlog of feedback to consider for implementation after we launched the health-related DNA results experience.

In December 2022, All of Us officially began returning health-related DNA results to participants (“NIH’s All of Us Research Program returns genetic health-related results to participants” 2022). Once the launch was behind us, we took some time to reflect on all the efforts undertaken to gather, prioritize, and implement stakeholder feedback during the development of the return of results experience (Figure 2). Even though the “centralized” phase was an improvement over the “ad-hoc” phase, we realized that centralization had not addressed all the problems contributing to the unintentional friction that had been generated by the original ad-hoc approach—there was room for more improvement. As we examined the evolution of our approach, we documented what had worked, where points of friction remained, and what problems might have contributed to those frictions.

Much of the friction that remained was the result of oversights in the process of planning stakeholder feedback engagements, as well as lack of coordination among teams at the outset. One of the most frequent challenges we encountered was managing expectations. Because we did not always set clear expectations with stakeholders at the outset by defining the scope of feedback we were interested in, we ended up with a lot of feedback we could not use but on which stakeholders still expected action.

It was also only at the end of the process that we realized an oversight in planning had made it difficult to measure our success. This was because we had not collected any baseline metrics against which to test our improvements. Since we were launching a brand-new experience, we would not have been able to gather baseline metrics from a functioning experience, but we could have established more baseline metrics in our earliest stakeholder reviews, against which we could compare the feedback on later iterations that had been improved based on feedback.

Finally, even though centralization allowed us to act on the highest priority feedback more efficiently, many stakeholders were still left unsatisfied because we failed to communicate our decisions and actions back to them. While we thought we were honoring a commitment to design for them by including them in the process, by not closing the loop, we left several stakeholders wondering if we had actually honored this commitment.

After documenting the lessons we learned from our ad-hoc and centralized approaches, we set out to design a more intentional model for stakeholder feedback that would address the gaps in our approach and ease the remaining points of unintentional friction. We hope that by applying this model, future project teams can ensure a smoother process of gathering, documenting, prioritizing, and implementing stakeholder feedback.

A NEW “INTENTIONAL” MODEL FOR STAKEHOLDER FEEDBACK

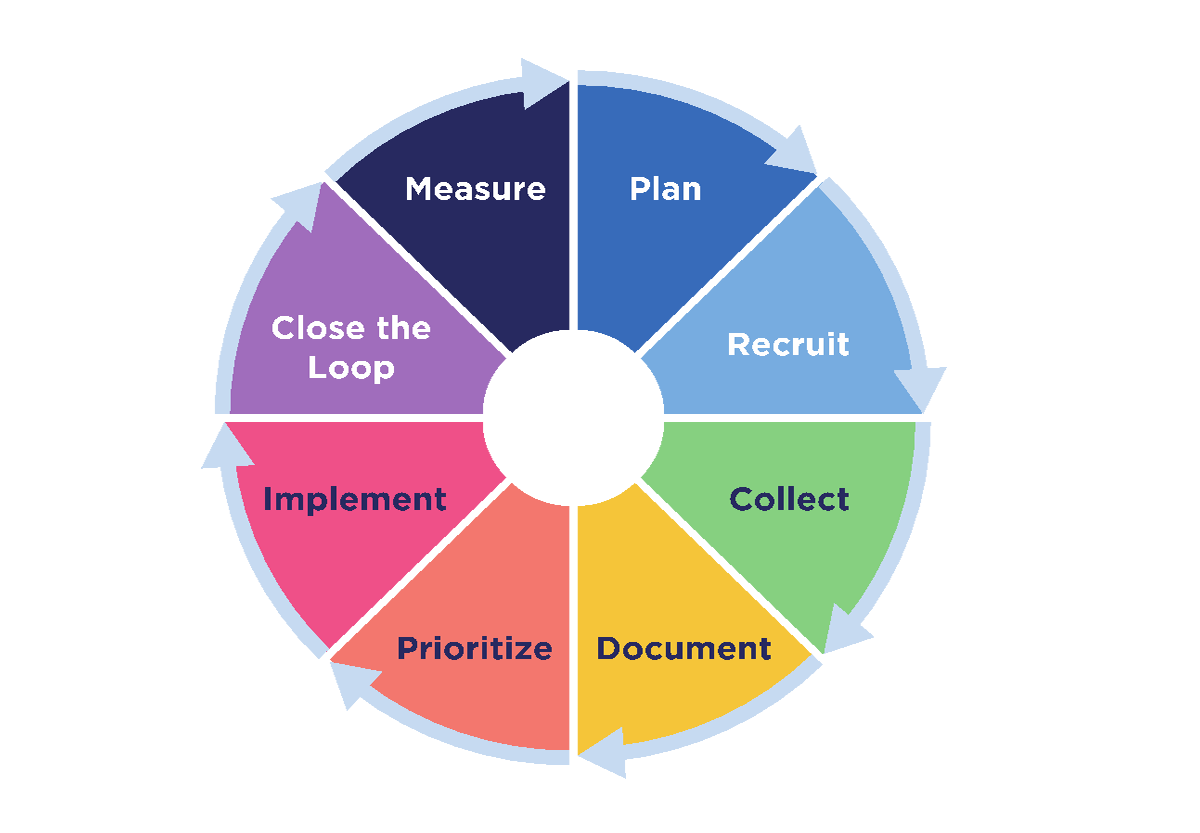

Our working stakeholder feedback model is a continuous cycle of eight distinct phases (Figure 3):

- Plan

- Recruit

- Collect

- Document

- Prioritize

- Implement

- Close the loop

- Measure

For each phase, we recommend several activities that are designed to capitalize on the lessons we learned during our ad-hoc and centralized approaches to stakeholder engagement.

Plan

Thorough planning helps ensure all the other phases go smoothly, from choosing feedback topics of interest and methods for gathering feedback all the way through prioritization and measuring impact. It is important to plan not only what topics or questions will benefit from stakeholder feedback but also the following details:

- Define what success looks like. Success metrics and targets may evolve over the course of the project and will depend on your existing baseline knowledge and metrics. For example, one measure of success we set out to achieve with our health-related DNA results was over 90% comprehension of the personalized DNA results reports we shared with participants.

- Once you have defined your success metrics, document any available baseline knowledge and metrics. You can use these to anchor your targets and more easily identify successful improvements. This information may also inform and guide the questions you ask your stakeholders.

- Identify your audience. Knowing your audience will help you decide which stakeholder groups you want to invite to provide feedback.

- Determine the method(s) you will use to collect the feedback. If little knowledge is available, you may want to start with qualitative methods, such as focus groups and listening sessions. If extensive knowledge exists, you may want to use methods that allow you to ask precise questions or measure specific changes to the user experience, such as surveys, usability testing, or comprehension testing. Consider how you might need to tailor your feedback collection methods to your audiences’ needs as well.

- Select rubrics to help you categorize and prioritize all feedback objectively and consistently. We used a product management prioritization tool called the RICE scoring model. Using this model, the Working Group scored each piece of feedback using four factors: Reach, Impact, Confidence, and Effort (Singarella et al., n.d.).

- Agree on a trade-off process to discuss and resolve conflicting feedback if/when it arises. This includes identifying who the “informers” and “deciders” will be.

Recruit

Identify potential stakeholder groups based on the audience and your defined problems or questions and invite representatives from the stakeholder groups to provide feedback. Also consider the following:

- Precisely define the scope of the feedback you are requesting (e.g., review only the images, as we cannot make changes to the copy at this time).

- Clearly explain expectations (e.g., this is a one-time, virtual focus group that will last two hours, or this is a three-month commitment and may involve five hours of your time each week).

- Determine if you can provide incentives or tokens of appreciation for stakeholders’ time and input.

- Share your plan for when and how you will close the loop after stakeholders provide their feedback.

Collect

Apply the method(s) you selected to capture the feedback. Remember to tailor the feedback-collection methods to the stakeholders and the question. Also consider how you might make the experience as frictionless and flexible as possible for your audience, depending on whether you are using quantitative or qualitative methods. For example:

- For quantitative methods, provide clear instructions and set firm deadlines.

- For qualitative methods:

  - Assign a note taker.

  - Record the sessions, if possible.

  - Create opportunities for stakeholders to share offline, asynchronous feedback. This could be as simple as (1) asking stakeholders to send additional feedback via email or in a separate document, (2) scheduling a one-on-one phone call for those who request it, or (3) providing an online form (or survey) stakeholders can complete.

Document

From the beginning of the stakeholder engagement process, document all feedback, regardless of how minimal or extensive, in a centralized location, such as a spreadsheet. Also, make the feedback accessible to all individuals and teams who will help prioritize and implement feedback. It also is helpful to categorize the feedback as it is received. For example, we categorized the feedback into one of four buckets:

- Launch-blocking: This is feedback we agreed should be prioritized for implementation prior to launch.

- Needs more analysis: This is feedback that we knew would require additional analysis before we could prioritize it. Perhaps it was unclear how many teams would need to be involved in implementing the change or how much time would be required to implement the feedback.

- Duplicate: This category helped us understand how often we received similar feedback from different stakeholders.

- Out of scope: We used this category to track feedback that was out of scope for health-related DNA results. For example, it was not uncommon for a stakeholder to share additional feedback about other elements of the program that were tangentially related to the return of health-related DNA results. One example of this type of feedback was requests for other types of results besides DNA results, such as blood type.
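The centralized log and the four buckets described above can be pictured as a small data structure. The following is a minimal Python sketch, not the program’s actual spreadsheet: the `FeedbackItem` fields, the `summarize` and `needs_review` helpers, and the sample entries are all hypothetical and chosen only for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    # The four buckets named in the text.
    LAUNCH_BLOCKING = "Launch-blocking"
    NEEDS_MORE_ANALYSIS = "Needs more analysis"
    DUPLICATE = "Duplicate"
    OUT_OF_SCOPE = "Out of scope"


@dataclass
class FeedbackItem:
    # Hypothetical columns; the paper does not list the real ones.
    source: str        # stakeholder group that gave the feedback
    method: str        # e.g., "focus group", "usability testing"
    summary: str       # short description of the feedback
    category: Category


def summarize(log):
    """Count how many logged items fall into each bucket."""
    return Counter(item.category for item in log)


def needs_review(log):
    """Keep only items that still require scoring and prioritization."""
    skip = {Category.DUPLICATE, Category.OUT_OF_SCOPE}
    return [item for item in log if item.category not in skip]


# Invented sample entries for illustration only.
log = [
    FeedbackItem("Participant advisory board", "listening session",
                 "Clarify who can see my results", Category.LAUNCH_BLOCKING),
    FeedbackItem("Community partner staff", "survey",
                 "Clarify who can see my results", Category.DUPLICATE),
    FeedbackItem("Proxy participants", "usability testing",
                 "Please also return blood type", Category.OUT_OF_SCOPE),
    FeedbackItem("Subject matter experts", "content review",
                 "Reword the risk explanation", Category.NEEDS_MORE_ANALYSIS),
]

counts = summarize(log)
review_queue = needs_review(log)
print(counts[Category.DUPLICATE], len(review_queue))
```

Filtering out duplicate and out-of-scope items first mirrors the benefit described earlier: less feedback reaches the review and prioritization step, while the duplicate count still shows how often a theme recurred.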

Prioritize

Apply the evaluation rubric selected during the Plan phase to prioritize all the feedback objectively. As noted above, we used the RICE (Reach, Impact, Confidence, Effort) scoring model.

Once we had documented all our stakeholder feedback, it was clear that we would not be able to address all the relevant feedback we received. Using the RICE scoring model helped us objectively decide which feedback to prioritize and implement before we launched, what needed to be implemented but could be implemented after launch, and what feedback we should monitor and revisit after launch. The RICE model also ensured that we considered constraints, such as timelines, and consulted with all parties involved in implementing the feedback to understand the reach, impact, and effort.
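To make the scoring step concrete, here is a minimal Python sketch of RICE scoring. It assumes the formulation commonly used in product management, score = (Reach × Impact × Confidence) / Effort. The text names the four factors but does not say how the Working Group combined or scaled them, so the formula, the `rice_score` function, the scales, and every number below are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScoredFeedback:
    summary: str
    reach: float       # assumed: people affected in a given period
    impact: float      # assumed scale: 0.25 (minimal) to 3 (massive)
    confidence: float  # assumed: 0.0 to 1.0
    effort: float      # assumed: person-weeks, must be > 0


def rice_score(item: ScoredFeedback) -> float:
    """Common RICE formulation: (reach * impact * confidence) / effort."""
    if item.effort <= 0:
        raise ValueError("effort must be positive")
    return item.reach * item.impact * item.confidence / item.effort


def prioritize(items):
    """Return items sorted from highest to lowest RICE score."""
    return sorted(items, key=rice_score, reverse=True)


# Hypothetical feedback items and scores.
items = [
    ScoredFeedback("Simplify consent wording", 1000, 2, 0.8, 2),
    ScoredFeedback("Add glossary of DNA terms", 500, 1, 1.0, 1),
    ScoredFeedback("Redesign results dashboard", 1000, 3, 0.5, 10),
]

ranked = prioritize(items)
for item in ranked:
    print(f"{rice_score(item):7.1f}  {item.summary}")
```

In this invented example, the dashboard redesign ranks last despite having the highest assumed impact, because its higher effort and lower confidence reduce its score. That is the kind of trade-off a shared rubric makes visible to every division.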

Implement

Implement all the prioritized stakeholder feedback and, depending on where you are in your project lifecycle, either test the impact of the changes or launch and prepare to measure your product’s success. The key here is to implement feedback that has been prioritized. It can be tempting to implement non-prioritized, low-effort feedback for the sake of making progress, but this can take time and resources away from the most important work.

A couple of lessons we learned during implementation:

- It is important to coordinate and communicate implementation projects across all individuals or teams involved in the overall project. For example, the Communications team developed an email and text message campaign that notified participants about the option to receive personalized DNA results. The team presumed some technical features would be available to use in these digital communications. Only during testing did it become apparent that the functionality was not working as anticipated. On another occasion, the UX team implemented updates to the online participant experience based on findings from usability testing. As a result, the offline instructional materials for participants were incorrect and needed to be updated.

- You can use implementation projects as an opportunity to gather more feedback. We initially launched the return of DNA results to a group of 50 “friends and family” participants to test the experience. Because we had access to these friends and family, we asked them to complete a survey so we could gather more feedback on the effectiveness of the final product.

Close the Loop

Closing the loop is about creating relationships and building trust with stakeholders. In our experience, we found that many stakeholders were very passionate and thoughtful about their feedback and became frustrated when they did not hear back from us.

Report back to the stakeholders who contributed feedback so they know what was or will be implemented. It’s equally important to let them know what was not implemented and why. This phase is also an opportunity to ask stakeholders for suggestions on how to improve the process for future projects.

Closing the loop is a work in progress at All of Us. We continue to investigate how best to standardize the process of closing the loop and reporting back to stakeholders in a more timely fashion.

Measure

To measure is to evaluate the impact of the stakeholder feedback. This may be done by monitoring the analytics you put in place during the Plan phase, doing additional user testing, or comparing new data against the baseline data to check for improvement. As you monitor your analytics, look for new problem areas that may need to be addressed. This will inform your next steps and the cycle can begin again. Engage stakeholders to help address the newly identified problems.

WHERE ARE WE NOW?

Despite the challenges we faced gathering, prioritizing, and implementing stakeholder feedback, there is evidence to suggest that the stakeholder feedback we implemented did have a positive impact on the resulting experience. For example, when our UX team conducted the final comprehension testing of the online DNA results reports, the scores were approximately 97% for the Hereditary Disease Risk report and approximately 98% for the Medicine and Your DNA report. Additionally, we received no “launch-blocking” feedback from the Cultural Awareness Committee or the “friends and family” participants included in our soft launch.

We can also look to data from our Support Center for evidence of success. Between the “friends and family” soft launch in September 2022 and September 2023, our Support Center fielded 26,223 participant inquiries related to DNA results. Most of these were from participants asking when they would get their DNA results. There were only 1,067 inquiries about genetic counseling and only 110 on how DNA results might impact insurance coverage. Based on our stakeholder feedback, we had anticipated participant questions about genetic counseling and the impact of DNA results on insurance coverage. The Support Center data suggest that the materials available to participants on these topics were easy to understand.
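As a quick check on scale, the inquiry counts reported above can be expressed as shares of all DNA-related inquiries. This short Python sketch uses only the three figures from the text; the variable and function names are illustrative.

```python
# Figures reported in the text for September 2022 through September 2023.
total_inquiries = 26_223
genetic_counseling = 1_067
insurance_coverage = 110


def share(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole


counseling_pct = share(genetic_counseling, total_inquiries)
insurance_pct = share(insurance_coverage, total_inquiries)

print(f"Genetic counseling: {counseling_pct:.1f}% of inquiries")
print(f"Insurance coverage: {insurance_pct:.1f}% of inquiries")
```

By this calculation, genetic counseling accounts for roughly 4% of DNA-related inquiries and insurance coverage for roughly 0.4%.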

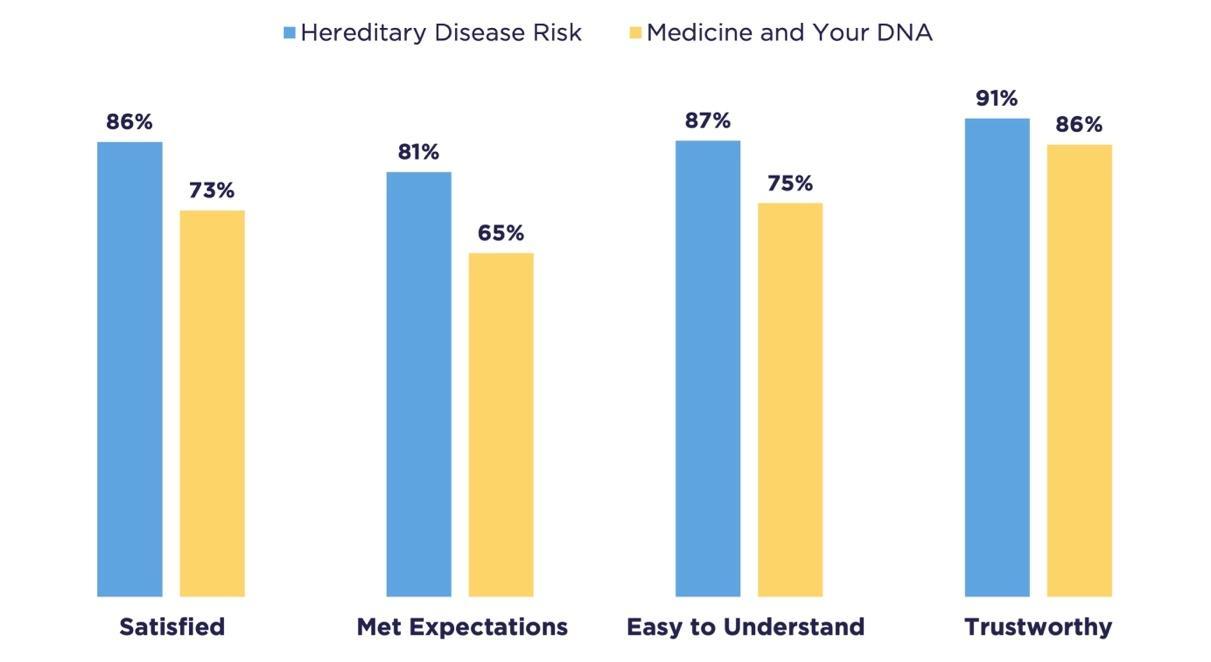

Also, participants who get their health-related DNA results have the choice to complete an anonymous satisfaction survey about their results. These survey results suggest that a majority of participants were satisfied with the content of their results and found them easy to understand, trustworthy, and comprehensive (Figure 4).

These data now serve as baseline metrics against which we can measure the success of future improvements to the experience. We continue to gather participant satisfaction data and monitor it regularly, looking for new signals of unintended friction.

Our next step is to close the loop with our stakeholders by letting them know what feedback we chose to implement and why, so they will feel respected as our partners in the program.

As for the future of the stakeholder feedback model, we anticipate using it to continue soliciting stakeholder feedback to inform future improvements to the participant experience. We also plan to socialize the model across the various All of Us divisions so other project teams can learn from our experiences. We expect that in the process of socializing the model, we can also gather input from our internal stakeholders to continue to evolve the model. In fact, we can apply the model to this internal stakeholder engagement as a test case.

We recognize that the model is heavily based on personal experience and may also be improved upon by incorporating further research into best practices and other existing models for stakeholder engagement. Similarly, we hope to improve the model by sharing it externally through conferences and other forums where we might gather input from our colleagues.

CONCLUSION

As All of Us enters its sixth year of enrolling participants, the program is growing, scaling, and maturing beyond its startup phase. This presents an opportune and essential moment to improve our approach to stakeholder feedback by applying and continuing to evolve this model.

For the All of Us Research Program to successfully enroll at least one million participants who reflect the diversity of our country, we will continue to engage with our stakeholders. Their feedback is critical to developing a research experience that participants trust and support. The data and information they share will not only help advance precision medicine research but also give them opportunities to learn about their own health.

If we fail to continually reflect on and improve our stakeholder feedback process, we risk compromising our participants’ trust in All of Us. We hope that by slowing down to speed up—by applying the intentional model to our stakeholder feedback process—we can mitigate that risk and fulfill our mission of accelerating health research to enable individualized prevention, treatment, and care for all of us.

ABOUT THE AUTHORS

Briana Lang, MA, BSJ, joined Wondros in 2017 where she leads UX strategy for the All of Us Research Program’s participant experience, with a focus on enrollment, data collection, and participant satisfaction. She has 18 years of experience in advertising, brand strategy, and UX design at both startups and large agencies. She has a Master of Arts in Design Management from the Savannah College of Art and Design and a Bachelor of Science in Journalism from Northwestern University.

Jennifer Shelley, MMC, MS, is a content strategist with the National Institutes of Health All of Us Research Program. She leverages communications best practices to positively impact the ways that communities can access, understand, and interact with health information. Prior to joining NIH, she worked with cancer researchers at the University of Nebraska Medical Center in Omaha and was an original member of WebMD’s medical news team.

Monica Meyer, MS, MPH, is a biomedical life scientist with Leidos. As a public health professional, she has diverse experience driving organized efforts to manage and prevent disease, promote human and animal health, and inform medical choices for the public, communities, and organizations. She has a Master of Public Health from Wayne State University School of Medicine and a Master of Science in Plant Pathology from Michigan State University.

Victoria Palacios, MPH, is a biomedical life scientist with Leidos. She is a dedicated scholar working to better understand the relationship between research, medical innovations, and public health. Her expertise includes project management of clinical research projects, data analysis and interpretation, and the development and implementation of new ideas in research. She has a Master of Public Health from The George Washington University and a Bachelor of Science in Biology from the University of Maryland.

NOTES

The authors would like to acknowledge the other members of the Stakeholder Feedback Working Group: Zeina Attar, Holly DeWolf, Bryanna Ellis, Ryan Hollm, Minnkyong Lee, Sana Mian, Jessica Reusch, and Anastasia Wise. We also would like to thank the many stakeholders who contributed feedback throughout the development of the health-related return of DNA results initiative, especially our participants. Finally, the authors would like to thank our supervisors, Margaret Farrell in the Division of Communications and Leslie Westendorf in the Division of User Experience, for their support of this project. This paper reflects the perspectives of the authors and does not necessarily represent the official views of the U.S. Department of Health and Human Services, the National Institutes of Health, or the All of Us Research Program.

REFERENCES CITED

All of Us Research Program Investigators; Josh C. Denny, Joni L. Rutter, David B. Goldstein, Anthony Philippakis, Jordan W. Smoller, Gwynne Jenkins, and Eric Dishman. 2019. “The All of Us Research Program.” New England Journal of Medicine 381, no. 7 (August): 668-676. 10.1056/NEJMsr1809937.

“All of Us Research Program Makes Nearly 250000 Whole Genome Sequences Available to Advance Precision Medicine.” 2023. All of Us Research Program. https://allofus.nih.gov/news-events/announcements/all-us-research-program-makes-nearly-250000-whole-genome-sequences-available-advance-precision-medicine.

Clark, Luther T., Laurence Watkins, Ileana L. Pina, Mary Elmer, Ola Akinboboye, Millicent Gorham, Brenda Jamerson, et al. 2019. “Increasing Diversity in Clinical Trials: Overcoming Critical Barriers.” Current Problems in Cardiology 44, no. 5 (May): 148-172. 10.1016/j.cpcardiol.2018.11.002.

“Core Values.” 2021. All of Us Research Program. https://allofus.nih.gov/about/core-values.

“Diversity in Genomic Research.” 2023. National Human Genome Research Institute. https://www.genome.gov/about-genomics/fact-sheets/Diversity-in-Genomic-Research.

“Genetics vs. Genomics Fact Sheet.” 2018. National Human Genome Research Institute. https://www.genome.gov/about-genomics/fact-sheets/Genetics-vs-Genomics.

“Hereditary Disease Risk | Join All of Us.” n.d. JoinAllofUs.org. Accessed September 24, 2023. https://allof-us.org/hereditary-disease-risk.

Kraft, Stephanie A., Mildred K. Cho, Katherine Gillespie, Meghan Halley, Nina Varsava, Kelly E. Ormond, Harold S. Luft, Benjamin S. Wilfond, and Sandra Soo-Jin Lee. 2018. “Beyond Consent: Building Trusting Relationships With Diverse Populations in Precision Medicine Research.” The American Journal of Bioethics 18 (4): 3-20. 10.1080/15265161.2018.1431322.

“Medicine and Your DNA | Join All of Us.” n.d. JoinAllofUs.org. Accessed September 24, 2023. https://allof-us.org/med-and-your-dna.

Moslimani, Mohamad, Mark H. Lopez, and Luis Noe-Bustamante. 2023. “11 facts about Hispanic origin groups in the U.S.” Pew Research Center. https://www.pewresearch.org/short-reads/2023/08/16/11-facts-about-hispanic-origin-groups-in-the-us/.

“NIH’s All of Us Research Program returns genetic health-related results to participants.” 2022. All of Us Research Program. https://allofus.nih.gov/news-events/announcements/nihs-all-us-research-program-returns-genetic-health-related-results-participants.

Popejoy, Alice B., and Stephanie M. Fullerton. 2016. “Genomics is failing on diversity.” Nature 538, no. 7624 (October): 161-164. 10.1038/538161a.

“Precision Medicine.” 2018. U.S. Food and Drug Administration. https://www.fda.gov/medical-devices/in-vitro-diagnostics/precision-medicine.

“Precision Medicine Initiative: Data Security Policy Principles and Framework Overview.” 2022. All of Us Research Program. https://allofus.nih.gov/protecting-data-and-privacy/precision-medicine-initiative-data-security-policy-principles-and-framework-overview.

“Precision Medicine Initiative: Privacy and Trust Principles.” 2022. All of Us Research Program. https://allofus.nih.gov/protecting-data-and-privacy/precision-medicine-initiative-privacy-and-trust-principles.

Ramirez, Andrea H., Lina Sulieman, David J. Schlueter, Alese Halvorson, Jun Qian, Francis Ratsimbazafy, Roxana Loperena, et al. 2022. “The All of Us Research Program: Data quality, utility, and diversity.” Patterns (N.Y.) 3, no. 8 (August): 100570. 10.1016/j.patter.2022.100570.

Schillinger, Dean. 2020. “The Intersections Between Social Determinants of Health, Health Literacy, and Health Disparities.” Studies in Health Technology and Informatics 269 (June): 22-41. 10.3233/SHTI200020.

Sierra-Mercado, Demetrio, and Gabriel Lazaro-Munoz. 2018. “Enhance Diversity Among Researchers to Promote Participant Trust in Precision Medicine Research.” The American Journal of Bioethics 18 (4): 44-46. 10.1080/15265161.2018.1431323.

Singarella, Olivia, Declan Ivory, Des Traynor, Liam Geraghty, Nadine Mansour, Oran O’Dowd, Beth McEntee, and Sean McBride. n.d. “RICE Prioritization Framework for Product Managers [+Examples].” Intercom. Accessed October 3, 2023. https://www.intercom.com/blog/rice-simple-prioritization-for-product-managers/.

Sirugo, Giorgio, Scott M. Williams, and Sarah A. Tishkoff. 2019. “The Missing Diversity in Human Genetic Studies.” Cell 177, no. 1 (March): 26-31. 10.1016/j.cell.2019.02.048.

“Tuskegee Study and Health Benefit Program.” 2023. Centers for Disease Control and Prevention. http://www.cdc.gov/tuskegee/index.html.

Wojcik, Genevieve L., Mariaelisa Graff, Katherine K. Nishimura, Ran Tao, Jeffrey Haessler, Christopher R. Gignoux, Heather M. Highland, et al. 2019. “Genetic analyses of diverse populations improves discovery for complex traits.” Nature 570 (June): 514-518. 10.1038/s41586-019-1310-4.

Wolf, Susan M. 2012. “The Past, Present & Future of the Debate Over Return of Research Results & Incidental Findings.” Genetics in Medicine 14, no. 4 (April): 355-357. 10.1038/gim.2012.26.