In this never-before-shared case study, we explain how our UX research team increased its scope of work from surface-level UI issues to a full portfolio of user-centered research. We use organizational ethnography and organizational change literature to develop a three-stage model of research team growth. We then discuss the implications of our model for strengthening ethnographic praxis in cultures dominated by usability engineering. We conclude with a reflection on building internal bridges to facilitate change. Keywords: organizational change, UI, UX, research, ethnography, usability, lean.

“It is by logic that we prove, but by intuition that we discover.” (Poincaré 1914: 129)

INTRODUCTION: EXPANDING THE SCOPE OF RESEARCH FROM UI TO UX

The skill segmentation deck went viral. Huh? Even we were surprised by the diversity of teams consuming the report. Unlike research reports of recommendations for a specific feature, which often read as gibberish or truisms to project outsiders, this content answered a myriad of questions that teams had debated for years. Product managers adopted the language of the user segmentation overnight. Scrum teams reprioritized their work to align features to their new understanding of users, asking, “Is this feature suitable for each segment?” Executives demanded more of the same, asking for similar research to be conducted for each product line. Our little side-project had done its job, moving us one step closer to earning research a seat at the strategy table. But before the fanfare, we worked for months to build the organizational readiness to hear and act upon the insights from the report.

Organizational Context: Product Development at Salesforce

When we joined Salesforce, most product managers (PMs) understood research to mean “usability testing.” PMs at Salesforce are subject matter experts, and are commonly described as “owners” of products or features, linguistically implying their decision-making authority. PMs are usually staffed to a particular feature for years, unlike user experience (UX) researchers, who are more commonly staffed to a feature for weeks or months. This staffing dynamic understandably made it abnormal for PMs to seek out UX researchers for insights on user behaviors or industry trends. Unlike other models of product decision making where PMs, engineers, and researchers are all required to agree before moving forward, at Salesforce product-related decision making historically fell squarely on the shoulders of product managers.

Compounding that challenge of UX researchers (and UX practitioners in general) having less domain knowledge than their PM counterparts, and consequently being left out of conversations about strategy and road maps, is a culture of aggressive product development schedules. Salesforce commonly releases multiple breakthrough products at each Dreamforce, the company’s annual convention and product release showcase. Prior to our user segmentation, we (researchers and stakeholders alike) were perpetually swept up in the sprint to meet Dreamforce deadlines, not often enough pausing to ask ourselves, “Are we building the right products for the right people?” The focus of investigation was more commonly “Can we get these working in time for Dreamforce?” which manifested in a deluge of requests to UX researchers for usability testing. While usability testing is an important component of a research portfolio, the collapsing of research into one method concerned us and many of our fellow researchers.

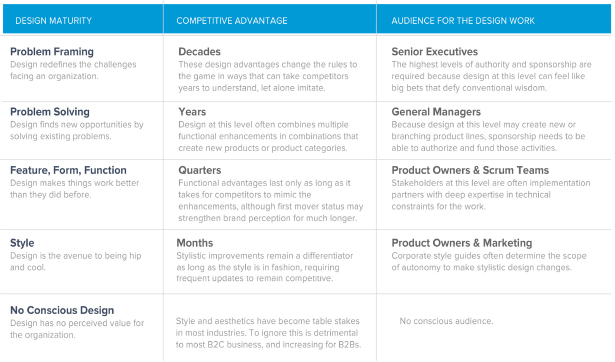

Today, things are improving: while usability still dominates research efforts, we are slowly but surely expanding into other types of work. To describe the cultural shift we are working towards on our team, we find Wu and McMullin’s design maturity stages useful (Figure 1). There is naturally variation from team to team, but the UX team historically has been engaged and valued for contributions at the level of Feature, Form, and Function. However, as a UX team, we aspire to also contribute at the level of Problem Framing, where both design and research contribute to defining the broader challenges facing our organization.

Figure 1. Design Maturity Stages. Adapted from Ambidextrous Magazine, 2006-2, and Jan Schmiedgen, 2013.

Each stage of design maturity is valuable and can be conducted strategically. While the model’s authors describe the “style” stage in a superficial light, we argue that it is as important as the other stages and provides equal opportunity for strategic contribution. For example, style guides like Bootstrap and Salesforce’s recently released Lightning style guide enable a visual and functional consistency that greatly benefits end users and empowers third-party developers who may not otherwise have access to design guidance. Our goal was never to ignore “lower” stages of maturity, but to enable strategic contributions at each level.

Based on our insights from academic literature, the industry context, and our own ethnography of UX research culture change at Salesforce, we develop a model of organizational change for UX research teams that shows the path from fixing bugs to building businesses. We use “fixing bugs” to describe the mindset that the job of research is to identify and diagnose usability issues. We use “building businesses” to describe the mindset that a balanced portfolio of research also includes initiatives for de-risking new product development and co-creating entirely new lines of business with product development teams. Our model has three stages: excavation, foundation, and construction. We illustrate the stages of our model with ethnographic examples of projects from our own research team, both successes and mishaps.

Fixing Bugs: The Job of Research Is to Diagnose Usability Issues

In the beginning of our company and many others, the role of UX research was widely considered to be fixing bugs: that is, identifying and diagnosing usability issues. Most researchers had backgrounds in engineering psychology or human-computer interaction, and devoted their careers and skill development plans to understanding the fine details as well as the big picture of usability and ease-of-use concerns, for both software and hardware. Groups such as BayCHI, which is the San Francisco Bay Area chapter of the ACM Special Interest Group on Computer-Human Interaction (SIGCHI), and books such as Measuring the User Experience (Tullis and Albert 2013) exemplify this type of work. In this mindset, excellence is defined at the level of form, feature, and function. Further, excellence is easily quantifiable and measurable. This manifests as optimizing flows for end users, increasing conversion rates, and the like. There is little reference to redefining the user’s problem or the approaches being used to solve that particular problem. The emphasis is on improving the metrics for the problem as currently defined. In this mindset, product managers make statements such as, “We think this change will help users accomplish X more quickly.” It is then the role of the researcher to define the study and to test that hypothesis. Excellent research quickly provides feedback to the product manager on whether their intervention helped move the needle of user experience in the desired direction.

The fixing bugs mindset is useful for optimizing implementations. When the product and market are well-defined, users’ expectations are well-understood, and technical constraints are well-understood, research aimed at optimizing a UI is the fine-tuned craftsmanship that makes things better.

Yet this optimization mindset falls short when applied to undeveloped products, or new markets, or users whose expectations are unclear, or unknown technical constraints. For that, a broader mindset is needed. The broader mindset includes problem framing skills, subject matter expertise, a wide toolkit of research methods, and empowerment on the part of individual researchers and the research team as a whole.

Sometimes this broader mindset means researchers advocate for no research at all on a specific problem. For example, in our work, we observed a product manager asking, “Should the lock button be on the screen or not?” The researcher, who had conducted prior related studies on the target user segment, said, “Well, experienced users look for it, and are anxious if it is not there. And it teaches inexperienced users a good behavior. So yes, it should be there.” The room agreed. In this example, no study was needed. A/B testing a version with the lock button alongside a version without the lock button would have been a waste of resources. By including researchers with problem framing skills and subject matter expertise earlier on in the process, we avoid the time and expense of unnecessary usability studies.

We believe research has a greater impact when researchers are involved in the problem framing with product managers from the beginning. Researchers and product managers can create better hypotheses faster when they work together. That is a hallmark of the building businesses mindset. But before we can get there, we must proceed through three stages of change: excavation, foundation, and construction.

Building Businesses: The Job of Research Also Includes Co-Creating New Lines of Business

We have not yet reached our end goal. We seek a state in which the work of our research team spans all the design maturity stages, so that UX excellence is reflected both in UI nuances and in shaping product-market fit for new lines of business. We seek a state in which truth is triangulated through mixed methods studies, including a broad array of qualitative and quantitative analysis techniques. In sum, we seek a state in which product development stakeholders, from product managers to the director of product, see UX researchers as strategic partners.

In this stage, researchers will lend rigor to evaluating and documenting opportunity spaces—a role that was previously exclusively the domain of product managers. Further, researchers and product managers alike will use lean startup methods to test value hypotheses and iterate on them before platforms are extended into multiple languages and archaic browsers. In other words: we will answer “Is this a good idea?” before devoting large amounts of resources to optimizing for ease of use.

HOW DOES ORGANIZATIONAL CHANGE HAPPEN? ACADEMIC AND PRACTITIONER PERSPECTIVES

Before moving on to our model, we address the broader question of how organizational change happens. In this section, we draw on both academic and practitioner perspectives of organizational change. In terms of academic literature, we contrast Armenakis and Bedeian’s (1999) framework of content, context, process, and criterion issues with Tsoukas and Chia’s (2002) argument that organizational becoming requires addressing values and beliefs. We use this contrast to build a political map of stakeholders for organizational change in the UX Research team. In terms of industry best practices, we discuss success stories in our industry. We build out our mile markers for organizational change based in part on models developed by management consulting firms Jump Associates and SecondRoad. The political map and the mile markers together form the foundation for our model of organizational change at Salesforce.

Academic Perspectives: How Values and Beliefs Interact with Content, Context, Process, and Criterion Issues to Create and Sustain Change

Organizational change is a broad topic. To organize the mass of academic literature on the subject, Armenakis and Bedeian (1999) reviewed influential studies from 1990-1999 and uncovered four key themes: content, context, process, and criterion issues. Sixteen years later, their framework still offers useful insights for organizational change in general—and for UX research teams in particular. We dive more into these insights below, then contrast them with Tsoukas and Chia’s (2002) paper on organizational becoming, a model focused on the key role of values and beliefs in organizational change. We conclude this section with open questions for practitioners derived from the academic literature.

While reviewing academic perspectives on organizational change, we kept the following questions in mind. First, what is change? We were curious about conceptual and operational definitions of organizational change, and about how those definitions advanced our understanding of how we might organize UX research teams. Second, how does change happen? We wanted to know how, if at all, researchers described temporal and causal patterns common to organizational change across organization sizes, ages, sectors, and industries. Third, what interventions make change more successful? We were interested in how, if at all, particular organizational interventions led to more successful experiences of change from both individual and organizational perspectives.

Armenakis and Bedeian’s (1999) framework of content, context, process, and criterion issues addresses all of these to some degree. They point to a variety of conceptualizations and operationalizations of organizational change in the management literature. More importantly, they use their four themes to illustrate both how change happens and what interventions make change more successful. In their paper, content issues refer to the substance of the desired change within the bounds of an organization. For example, changes in organizational structure and in performance evaluation processes count as content issues. Context issues refer to how environmental factors influence the substance of an organizational change. For example, changing FDA regulations regarding requirements for clinical trials affect the substance of an organizational change in a biotech firm that is bringing new drugs to market. Process issues refer not to organizational processes but to how organizational change happens over time. A timeline of the transformation of Apple from 1976 to the present would illustrate process issues. It may make more sense to call these phases of change. In Armenakis and Bedeian’s paper, the process issues section reviews published models of how organizational change happens in an attempt to synthesize them into a broader model of organizational change. Finally, criterion issues refer to outcome variables. In other words: how do we know if organizational change is successful? Traditionally, success has been measured by bottom-line metrics such as profitability and market share. But Armenakis and Bedeian point out that individual metrics such as commitment to an organization and job-related stress also matter.

So we may summarize their contribution to the literature as follows: when discussing organizational change, describe the substance of the change (content), the environment in which the change occurs (context), the shape of the change over time (process), and the success metrics for the change (criterion).

Armenakis and Bedeian’s paper helps us think about organizational change for UX research cultures as follows. First, it is a useful reminder that the substance of the change we are trying to make—say, from usability to ethnographic praxis, or from no UX presence to earning UX a seat at the strategy table—is only a small part of organizational change. If we focus on substance to the exclusion of context, process, and criterion issues, we will fail. Second, the paper reminds us that small and large organizations alike have successfully navigated contextual pressures—so “our firm is too large to really change” is not an excuse. The transformation of Intuit is a case in point. Third, organizational change takes time. While longitudinal, multi-decade studies of organizational change are lacking in the literature, it would do us well to remember that any of our change projects will take several years and involve ups and downs. For example, Armenakis and Bedeian point to a four-stage process of change developed by Jaffe, Scott, and Tobe (1994):

Denial occurs as employees refuse to believe that a change is necessary or that it will be implemented. This is followed by resistance, as evidenced by individuals withholding participation, attempting to postpone implementation, and endeavoring to convince decision makers that the proposed change is inappropriate. Exploration is marked by experimentation with new behaviors as a test of their effectiveness in achieving promised results. And, finally, commitment takes place as change target members embrace a proposed change. (Armenakis and Bedeian 1999: 303)

While it may not be fun to think about denial and resistance, preparing for those stages will help change agents navigate the inevitable challenges associated with transforming UX research cultures from the inside out.

Finally, Armenakis and Bedeian’s paper reminds us to clarify success metrics for change efforts from the outset. Do we define success in terms of higher headcount for UX, having UX representatives at executive-level strategy meetings, measurable impact on the shipped product, some combination of these, or something else entirely? Our definitions of success, along with the relationships and shared understandings we build around those definitions, influence the trajectory of our organizational change efforts.

In contrast to Armenakis and Bedeian’s work on organizational change, Tsoukas and Chia (2002) use the term organizational becoming to reflect the notion that change is constant—humans and their interactions are dynamic—and organizations are consequences of these interactions. In other words, change comes before organization, ontologically speaking. Further, and more importantly for our paper, the process of organizational becoming rests on an internal transformation of values and beliefs. It is much easier to change an organizational chart than it is to change the values and beliefs of employees in an organization.

Both Armenakis and Bedeian and Tsoukas and Chia emphasize the importance of interpersonal relationships and interactions. But they do so in fundamentally different ways. For Armenakis and Bedeian, process models of organizational change include stages that reference the state of relationships between those advocating change (“change agents”) and those resisting change. For Tsoukas and Chia, organizations emerge from a dynamic system of interactions between actors, and so any conversation about an organization without reference to the interactions between members of the organization is nonsensical by default. They define change as “the reweaving of actors’ webs of beliefs and habits of action as a result of new experiences obtained through interactions” (Tsoukas and Chia 2002: 570)—something fundamental to the daily human experience, not reserved for special initiatives designed to turn an organization around or develop new innovative capacities.

In terms of creating a useful model of organizational change for UX research cultures, these two papers offer two key insights. First, relationships matter. If organizations are indeed emergent properties of systems of relationships between actors, then focusing on relationships first makes sense. How to focus on those relationships brings us to our second point: the highest leverage point for change is navigating value and belief conflicts within relationships. For example, in our work at Salesforce, we noticed researchers with usability backgrounds felt self-conscious about not having as much domain knowledge as product managers. They were reluctant to say “I don’t know,” whereas researchers trained in ethnographic methods were thrilled to say “I don’t know,” since that is often the beginning of a fascinating period of learning. It is impossible to build a culture of ethnographic praxis without addressing the fundamental values and beliefs (“Admitting I don’t know makes me look stupid and diminishes the respect the UX team deserves”) at stake, one at a time, in interpersonal interactions. Value and belief conflicts exist in relationships within and across job roles. In another example, we noticed marketing executives saying “we drink our own champagne around here” in reference to testing our own products internally, as opposed to the industry standard term “eating our own dog food.” In this case, our marketing culture emphasized excellence at the expense of celebrating learning: champagne is good by definition, so there is no conceptual or linguistic room to experience and learn from failure. This is in stark contrast to the values and beliefs held by the Lean Startup movement, in which failure is expected and welcomed as a learning opportunity.

Open questions for practitioners, then, include how to discover the values and beliefs of stakeholders as well as how to prioritize interpersonal work on aligning values and beliefs across a diverse set of stakeholders. We address these next.

Practitioner Perspectives: How Design Thinking Cultures Connect with Organizational Change

When lamenting the organizational challenges of building UX teams, we find it helpful to remember that the mainstream burgeoning of the field of design, or UX, is only 15 to 20 years young. Many companies became interested in design thinking in the years following Steve Jobs’ return to Apple. By 1998 the iMac G3 had shipped, kicking off a succession of product launches that led the company from near bankruptcy to world leadership in consumer electronics. Then in 1999, the renowned design agency IDEO was featured on ABC’s Nightline. By showcasing how IDEO reimagined the experience of grocery shopping and redesigned the shopping cart, the episode introduced design thinking to audiences well beyond practitioners. So, while the disciplines of UX, human-centered design (HCD), and design thinking were far from new, the increasing prominence of these disciplines piqued the curiosity of Fortune 500 companies enough that many of them sought to bring design thinking skills in-house. It was in this context that the idea of a design-led company emerged. Despite Apple’s success and example, the value and role of design (and consequently the role of research in informing design) remains a highly contentious issue.

Because the discipline of UX is still rapidly developing, so too is the language practitioners use to describe our work. Many practitioners use the terms HCI, lean, agile and design-led synonymously, while others bristle at the inconsistencies between those schools of thought and the implied process discrepancies. Even more often, stakeholders with the authority to implement design-led cultures are too busy with other responsibilities to investigate the value of a design-led culture—much less to operationalize a change effort to create one. Recently, Alex Schleifer, Airbnb’s head of design, cautioned that “the point isn’t to create a ‘design-led culture,’ because that tells anyone who isn’t a designer that their insights take a backseat. It puts the entire organization in the position of having to react to one privileged point of view” (Kuang 2015a). Practitioners like Eric Ries and Laura Klein are greatly helping to define the lean approach to product development and the role of design and research within that process.

Not surprisingly, some of the most notable success stories touting the power of design thinking are linked to Ries and Klein. In his 2011 book, The Lean Startup, Ries describes many of the cultural and process changes implemented at tech giant Intuit. Further, the increasing availability of methods and guidebooks like Klein’s 2013 UX for Lean Startups is providing structured guidance to help tech companies embrace UX research in the product development process. Still, even the best guidebooks alone cannot bridge the gap of cultural change.

Google provides an interesting case in point. For years, the culture, which had deep roots in engineering and empirical evidence, was considered at odds with design. It was only a few months ago that Fast Company proclaimed that Google “finally got design” (Kuang 2015b), describing the company’s transformation from valuing the best design as no design at all to the design-savvy culture that launched the Material Design system. The article describes the importance of executive support for design from founder Larry Page as the tipping point for valuing design sensibility in product development decision making. In the same article, the author references the now-infamous anecdote of teams A/B testing 41 shades of blue, scoffing that “it wasn’t a design philosophy as much as a naked fear of screwing up Google’s cash machine.” It was only six years ago, in February of 2009, that Marissa Mayer, then head of UX at Google, was citing that same work of “41 shades” as an exemplary design practice (Holson 2009). To us as outsiders, it seems nothing less than miraculous that Google made such drastic cultural changes in only six years’ time.

The teams at Google didn’t abandon rigor or engineering excellence. They simply expanded their culture to be inclusive of design values, like consistency and approachability. In Tsoukas and Chia’s terms, Google became a new organization by reweaving its values and beliefs to be more inclusive of both engineering and design.

OUR MODEL IN PRACTICE: ORGANIZATIONAL CHANGE AT SALESFORCE

We illustrate the three stages of our model—excavation, foundation, and construction—with examples from organizational change at Salesforce.

Excavation: Smoothing and Documenting the Lay of the Land

In the excavation stage, research teams use mixed research methods to document the history, culture, processes and contributions of the team. The outcomes of this inquiry provide a documented lay of the land, including shared language and mental models describing what the team is and does. By clearing away the distractions of day-to-day particulars, like specific tools and linguistic styles, the team can align on the values, principles and language they share. This alignment creates a team brand, and a unified message to stakeholders about what the team is and does. From this shared ground, the team also begins to build bridges with stakeholders.

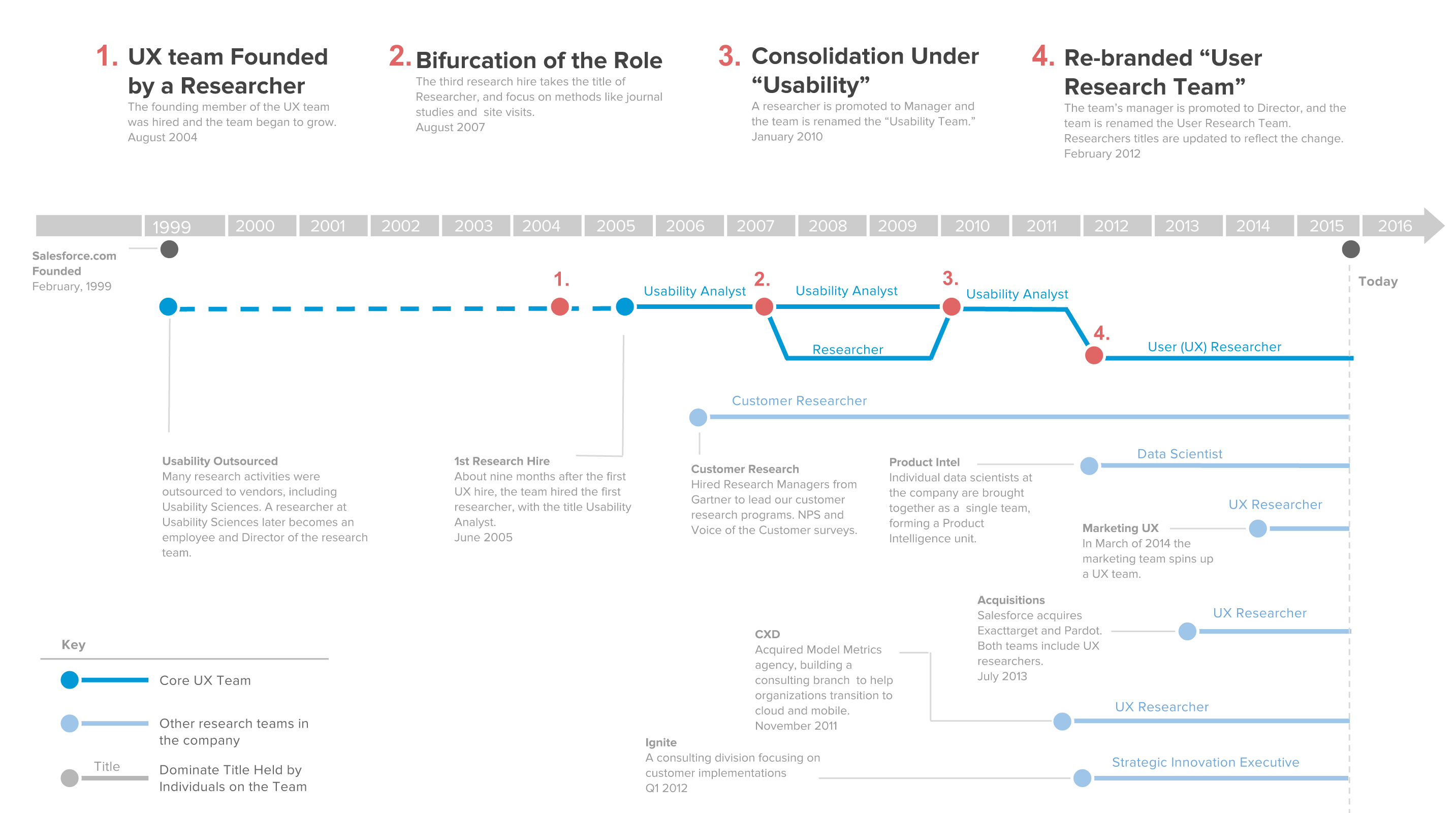

Mapping the Terrain: The History of Research at Salesforce – To begin the process of excavation, we turned the tools of ethnographic praxis on ourselves, investigating the cultural shifts that would be required for stakeholders to trust and value research targeted at higher phases of design maturity.

As a first step, we constructed a history of research at Salesforce (Figure 2) to understand how the responsibilities, skills and conceptual approaches of the research team were shaped as the team grew. Salesforce was founded in 1999. Our founders’ commitment to excellent UX was evident in the UI, and informed by research from the start. In those earliest years, generative research was the responsibility of executives who visited their customers’ offices to observe their products in use, discuss expectations and gather future requirements. During the same period, evaluative research on the products to ensure ease of use was outsourced to trusted vendors.

Figure 2. Evolution of the research team at Salesforce. It’s also heartening to note here the explosion of research practices in the company since 2012, implying high demand for research, despite the ambiguity of the role.

The identity and values of the research team were shaped by four major events. The first occurred in 2004, when a former usability engineer from Oracle was hired to build a UX team in-house. The team began to grow and the first full-time researcher was hired within a year. For the next three years, a team of usability analysts continued to build out the evaluative research function at Salesforce. The second major event affecting team identity occurred in 2007, when the research role split into two: one team of usability analysts, focused on evaluative work, and one team of user researchers, focused on generative and formative work. From approximately 2007 to 2010, usability analysts conducted heuristic reviews and usability studies, while user researchers conducted interviews, focus groups, and diary studies. The two roles remained distinct until early in 2010, when the third major event took place. An individual contributor (IC) was promoted to manage the growing team of researchers, which became known as the Usability Team. While not all ICs formally changed their title, the focus of the team was clear: researchers were asked to prioritize conducting heuristic evaluations and usability studies to inform scrum teams’ decisions for the current product release.

Early in 2012, the pendulum swung in the opposite direction. At an executive’s request for the team to provide insights that could inform future releases, the Usability Team was rebranded the User Research Team, and ICs’ titles were updated to reflect the change. This was the fourth major event defining the team within the organization. Unfortunately, while the mandate was clear, the operational expectations for how the team’s day-to-day work would change were not. The organizational change was made before stakeholders reached alignment on how the team should change its work practices to produce new types of insights. For example: Would the team get more headcount to cover this additional work? Should researchers deny work requests from the scrum teams they’d been supporting to prioritize time for future-oriented work? Unresolved tensions about the new mandate led many senior members of the team to leave.

Documenting this history reminded stakeholders, including the team, that we are indeed in a perpetual state of “becoming,” and that there is historical precedent for correcting course to fit the times. It also showed that the team had long been researching both UI ease of use and more abstract user behaviors, clarifying that both types of work are and should be within this team’s domain.

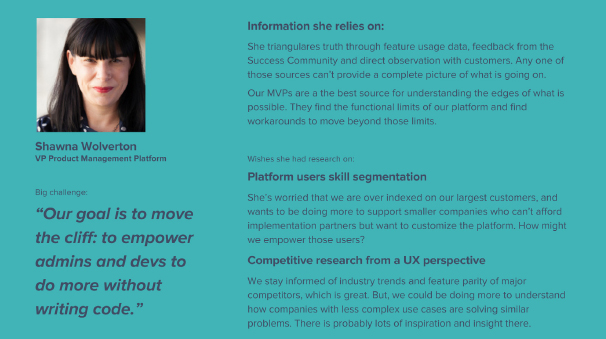

Figure 3. An example of a one page summary of the challenges of a Salesforce executive.

This work helped us identify executives most likely to be allies in culture change, and helped us understand the major concerns of both allies and those who might deny or resist culture change. Such a cultural map is essential for any change effort.

Over and over in our interviews, two themes emerged: usage data and sample sizes. Stakeholders across the board wanted to understand how customers used our product, and they wanted that information from our own usage data—server logs on the behavior of millions of users. Such datasets naturally lend themselves to quantitative analysis on large samples.

Yet usage data in our organization was hard to find for two reasons. First, our service level agreements severely restrict the collection and analysis of customer data, including usage logs. This is one of the many reasons banks and other large firms with sensitive information trust us with their data in the cloud. While these restrictions largely benefit customers, they are at odds with executives’ requests for more insights derived from usage data. Second, in those cases where our service level agreements permit the collection of customer data, instrumenting our product to collect that data is the responsibility of individual scrum teams. When faced with performance expectations to ship more features, scrum teams inevitably choose to allocate developer time to new features over instrumentation for existing features. These circumstances had inadvertently given executives the impression that the UX research team could not provide the insights they wanted most.

This created a conundrum for the research change agents: how might we use the methods and data available to us to improve products in a way executives understood and valued? We believed that broadening our stakeholders’ definition of UX research would require our team to demonstrate value at higher stages of design maturity. Again, we turned the tools of research on ourselves to create a shared conceptual model of the team’s process and typical types of contribution.

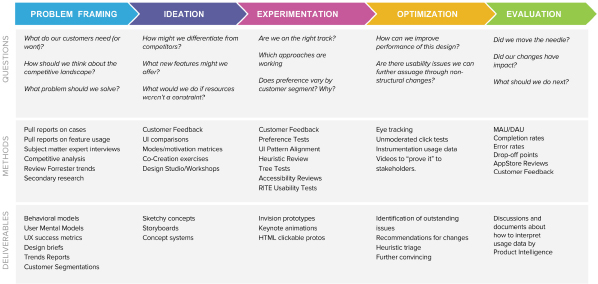

Team Card Sorting: Contextualizing Our Work in Product Development – The next step in the excavation stage was to provide an opportunity for the research team to build shared language and mental models for a full range of research. For example, different researchers often used the same term differently. Additionally, different methods are better suited to different stages of the research process. Further, not all researchers wanted to work in all stages of the Salesforce research process. That is not a problem. It is perfectly normal for researchers to choose to specialize in one stage or another. The challenge is communicating to stakeholders that the team as a whole operates across the stages. Contextualizing these differences and creating a shared meaning for each term was required in order to define the types of research we wanted to do more of in the future.

Several members of the team planned a team offsite to facilitate conversations and exercises to build shared mental models of research. One of the key activities was a research highlight reel. Each researcher put together 4-5 examples of excellent research work, with one example per slide. We printed all of the examples, then, as a team, performed a card sorting exercise to categorize our research work across the product development process. Our map revealed the scope of existing UX research at Salesforce (Figure 4). The analysis of the exercise results included recognizing that the same skills and mindsets that are valued at one stage of a project are counterproductive at other stages. For example, in an ideation mindset, many of the best practices of brainstorming apply, such as withholding judgment and valuing quantity above quality or feasibility. But in an optimization mindset, introducing new ideas is counterproductive and distracting.

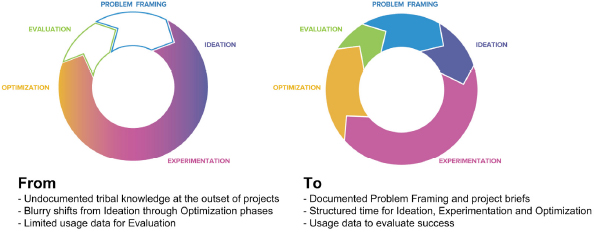

Figure 4. Where UX research fits into product development at Salesforce.

We found that most of our team’s research activities fell into the spectrum from ideation to development and implementation, but that the distinctions between those categories were blurry in the purpose and reach of the work. We found significantly fewer contributions to problem framing, which was consistent with our internal assessment of the need to create more generative work. And we found very little work in evaluation, largely due to a lack of usage data. Figure 5 illustrates these gaps.

Figure 5. Gap analysis of UX research vis-à-vis product development at Salesforce.

The research practice we wanted to build included a portfolio of work across the five stages of problem framing, ideation, development, implementation, and evaluation. This would require:

- a shift in thinking about the timing of research in the product development process,

- an illustration of how and why problem framing can help non-research stakeholders,

- structured and distinct time blocks for ideation, development, and implementation, and

- usage data and clear success metrics for evaluation.

In spite of the current gaps and individual preferences, the research team needed to present a unified view of research to external stakeholders, and doing so would have been impossible without this model.

It was from this point of excavation, with a clear understanding of the history of the team’s identity, a new understanding of expectations from stakeholders, and a shared model of the team’s scope of responsibility and process, that we were ready to move to the next phase of our change model: building the foundations to enable long-term success.

Foundation: Proofs of Concept and Expanded Scope

In the foundation stage, research teams create systems to address product teams’ usability needs while opening up time for other kinds of research. They also introduce the idea of “the right tool for the job” by showing, not telling, stakeholders how researchers can assist with a wide variety of product needs using a broader methods toolkit.

The team’s change agents began the foundation phase by identifying a single project to which multiple team members could contribute and that would inform product strategy in new ways. In our case, this was the segmentation survey we referenced in the introduction, the same segmentation survey that our colleague Mabel Chan highlighted in her EPIC 2015 blog post (Chan 2015). The survey deliverable was a product of qualitative and quantitative analysis, visual presentation, and collaboration across a wide variety of UX roles. Further, we built the organizational readiness to receive such work by finding an executive to provide early stage feedback on tone and content and to promote the work once released. With researchers, designers, product managers, and executives all involved in creating the deliverable, we had achieved significant buy-in across the organization even before shipping the report. That worked: everyone who contributed to the report spread it through their professional networks within the company, and the report went viral. It got the attention of Salesforce executives and built credibility for the research team.

We would be lying if we said we didn’t encounter any resistance along the way. As in any company, some stakeholders are quicker to trust than others. In the case of the segmentation survey, we built trust by showing what a multidisciplinary, engaged team can do when all the team members engage their networks.

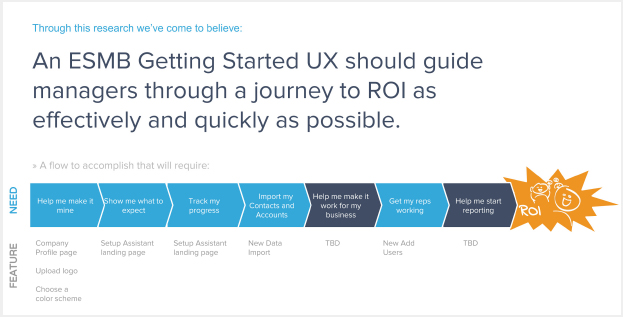

That said, change is possible even with smaller teams and smaller studies. Proof of concept studies go a long way in building stakeholder trust. As just one example, many companies struggle with how to bring new users into a system. In the UX literature regarding onboarding, many articles discuss labels, empty states for forms, and guidelines for helpful text. But research can do more. The problem framing approach makes it part of the researcher’s responsibility to help determine what goals a user should accomplish in the first few interactions with a product, over and above how to efficiently accomplish those goals. At Salesforce, we used to consider people done with onboarding when they had imported their contacts and accounts. But research revealed that people didn’t consider themselves all set up at that point. In fact, users felt finally at home with Salesforce after they had run sales reports from their system. Because of our organizational chart, it was difficult for the onboarding team and the reporting team to work together to find a solution. They had different cost centers, different delivery schedules, and different reporting structures.

Yet we did it. Even though it was uncommon at Salesforce for a researcher to define the sequence of interactions with our product, one of our researchers did it anyway (Figure 6).

Figure 6. Visualization of sequential user goals in a feature.

This visualization provided evidence for the product manager to make the case that reporting needed to be part of his feature—a case in direct conflict with our existing organizational structure. But because the product manager and the researcher came to that conclusion together, and included prior research in their decision, they were able to make a case that made it possible for not only their team but other teams in similar areas to bring user needs together in a productive way.

Construction: Strengthening and Expanding All Types of Research

In the construction stage, research teams build on the foundation of the right tool for the job by creating a full portfolio of research designed to help create and maintain multiple businesses across multiple touch points of the end user experience.

At Salesforce, the major challenge for creating a full portfolio of research was that from 2012 to 2015, no one owned the research process. We had come together as a research team to illustrate the different stages of research, and the different methods associated with those stages, but we didn’t have an executive advocate to explain the functional role of research and help build bridges with executives. Researchers and their managers had done as much bridge building as they could on their own. Building out a full, robust portfolio of research required a formal hire of a research director. Building the organizational readiness to hire a research director in the first place took two years and hundreds of conversations with stakeholders across the organization.

Our research director started in June 2015. It would not have been possible to hire a research director without the sustained interaction between and among executives, researchers, and managers about what behaviors and values we wanted to emphasize. In other words: it is organizational becoming, in the Tsoukas and Chia sense, in which organizational change is a product of endless interactions between human actors in which values and beliefs change gradually. The process took two years of discussing, documenting and subtly shaping executives’ values regarding research to build alignment about what the skillset and role of the UX Research Director should be.

Those conversations have already shifted the development culture to be more inclusive of research. Even at this stage of change, stakeholders now lead presentations with research, which historically would have been perceived as wordy or controversial and hidden in footnotes or an appendix. Research is starting conversations, and that is a good thing.

DISCUSSION: REFLECTIONS ON THE FUTURE OF UX RESEARCH

In our work, we’ve seen Salesforce move from a fixing bugs mindset toward a building businesses mindset. We’ve been through the excavation and foundation phases, and Salesforce researchers are constructing a broader portfolio of research as we write this. That said, our path leaves open several questions for the future of UX research as a field. In this section, we address two of them: the purpose of ethnographic praxis and the tension between broadening research efforts and respecting user privacy.

Let’s start with the purpose of ethnographic praxis. When the Salesforce UX research team began conducting ethnographic studies, product managers and other stakeholders loved the color and attention to detail that ethnographic observations can provide. To this day, our researchers are still getting requests for day in the life studies and details about how, when, and where our users do their work. However, these requests emphasize bits and pieces of information to the exclusion of broader understandings of our users and product implications of those understandings. In other words, they are requests for raw data rather than ethnographic synthesis. So we have built only the beginning of ethnographic praxis—because, as Sam Ladner (2014) says, ethnographic data is useless in practical terms without theory, which helps ethnographers and their stakeholders make sense of the data in terms that matter for the organization.

Next, in our organization, we have noticed a tension between broadening research efforts and respecting user privacy. We know from lean methodology that rigorous testing through innovation accounting can provide valuable feedback loops about whether a team is moving in the right direction. That rigor is sometimes at odds with end user privacy. This is a particular concern at Salesforce, where we are a bank of banks. We hold sensitive user data for financial and health institutions, among others, and we take our user privacy very seriously. Hence we have very superficial ways of understanding cohorts of users. New hires from other companies are often incredulous when they ask, “What industries (or skill levels of users) use that feature?” and hear, “That isn’t instrumented—it’s a privacy issue” in response.

CONCLUSION: BUILDING THE FOUNDATIONAL BRIDGE

Bridges allow organizations to become, at least in Tsoukas and Chia’s sense. If organizations are emergent properties of dynamic interactions between people, each of those dyadic relationships can be seen as a mini-bridge. Further, the road between any organizational starting point and ending point can be seen as a suspension bridge. The mini-bridges of stakeholder management give rise to the suspension bridge that takes an organization from a fixing bugs research mindset to a building businesses research mindset. Values and beliefs change in mini-bridges, in individual conversations and interactions between pairs or small groups of actors. But those value and belief changes cannot take hold until there is a top-down directive from executives integrating the new values and beliefs—and providing guidance on how those translate into behaviors. Google used to emphasize minimalism, simplicity, and good code at the expense of design. Later on, after hundreds if not thousands of interactions between employees on the topic, Larry Page emphasized that Google also values design and good user experience, which might sometimes mean animation and cohesive color schemes. The next step in Salesforce’s organizational becoming is for our most senior executives to similarly define how we value design in our organization as a means of approving of and solidifying grassroots change efforts. The recently released Lightning style guide, for example, defines design excellence at the style level and at the form, feature, and function level company-wide. The style guide eliminates the need for many usability studies, which frees researchers to move up the design maturity stage levels to problem framing and beyond.

Kirsten Bandyopadhyay is a user experience researcher based in San Francisco. An ethnographer and a survey researcher obsessed with R, they use data and empathy to make technology experiences better. They completed their PhD in science and technology policy at the Georgia Institute of Technology. hello@kirstenbandyopadhyay.com

Rebecca Buck is a UX researcher and strategist focusing on research methods for new product development. Her experience spans diverse industries including public health, pharma, education, insurance, consumer packaged goods and tech. She currently leads the UX research team for the IoT Cloud at Salesforce. rbuck@salesforce.com

NOTES

Acknowledgments – We would like to thank all of our fellow researchers and stakeholders at Salesforce for working with us on organizational change. Of course, this work remains our own and does not represent the official position of Salesforce or any other company. We would also like to thank our EPIC reviewers for valuable comments. Any errors remain our own.

REFERENCES CITED

Armenakis, Achilles A. and Arthur G. Bedeian

1999 “Organizational Change: A Review of Theory and Research in the 1990s.” Journal of Management 25(3): 293-315.

Chan, Mabel

2015 “Same Findings, Different Story, Greater Impact: A Case Study in Communicating Research.” Blog post for EPIC 2015 Perspectives published September 10, 2015, online at https://www.epicpeople.org/case-study-in-communication-design/

Holson, Laura M.

2009 “Putting a Bolder Face on Google.” New York Times. Online at http://www.nytimes.com/2009/03/01/business/01marissa.html

Klein, Laura

2013 UX for Lean Startups: Faster, Smarter User Experience Research and Design. Sebastopol, CA: O’Reilly.

Kuang, Cliff

2015a “Why Airbnb’s New Head of Design Believes ‘Design-Led’ Companies Don’t Work.” Wired 2015-01-30, online at http://www.wired.com/2015/01/airbnbs-new-head-design-believes-design-led-companies-dont-work/

2015b “How Google Finally Got Design.” Fast Company 2015-06-01, online at http://www.fastcodesign.com/3046512/how-google-finally-got-design

Ladner, Sam

2014 Practical Ethnography: A Guide to Doing Ethnography in the Private Sector. Walnut Creek, CA: Left Coast Press.

Poincaré, Henri

1914 Science and Method. New York: T. Nelson and Sons. Translated by Francis Maitland.

Ries, Eric

2011 The Lean Startup: How Today’s Entrepreneurs Use Continuous Innovation to Create Radically Successful Businesses. New York: Crown Business.

Schmiedgen, Jan

2013 “Design Thinking Boot Camp: Selected Slides for a Typical Professional Training.” Published on SlideShare at http://www.slideshare.net/janschmiedgen/design-thinkingbootcamp

Tsoukas, Haridimos and Robert Chia

2002 “On Organizational Becoming: Rethinking Organizational Change.” Organization Science 13(5): 567-582.

Tullis, Tom and Bill Albert

2013 Measuring the User Experience: Collecting, Analyzing, and Presenting Usability Metrics. Waltham, MA: Morgan Kaufmann.

Wu, Rosa and Jess McMullin

2006 “Investing in Design: To Build Business Buy-in, Designers Need to Buy in to Business.” Ambidextrous Magazine 2 (Nearly Winter 2006): 33-35. http://ambidextrousmag.org/issues/02/pdf/pages33-35.pdf