Today I turned left out of London Bridge station. I usually turn right and take the Tube but instead I went in the other direction and took the bus. I can’t explain why I did that.

Perhaps I was responding to a barely discernible change in crowd density or the fact that it was a bit warm today and I didn’t want to ride the Tube. Either way, I was trusting instincts that I am not able to translate into words.

Often when I travel around London I reach for the CityMapper app. I rely on it to tell me how best to get from A to Z but I don’t really know how it makes the recommendations it does. Likely it has access to information about the performance of the Tube today or real time knowledge of snarl-ups on London’s medieval roads. It’s clever and I love it. It knows more than I do about these things and what to do about them.

The workings of CityMapper are a mystery to me, but so are the workings of my brain. Even if I had a sophisticated understanding of neuroscience, physiology and chemistry, I’d just put many of my navigation decisions down to the very human qualities of experience, instinct or intuition.

By contrast, I’m often sceptical of “black box” systems. I want to know if the owners of a nearby shopping centre paid CityMapper to turn me into ‘footfall’ for their mall. But whether I know a little about these inputs—or even a lot—when I use the app I’m putting my faith in something I can’t hope to understand.

How important is it to understand how CityMapper decides on my route? Are the suggestions it gives any less inscrutable than those of passers-by I stop in the street without knowing much about their reasoning or level of knowledge? Is blind faith in strangers and in CityMapper broadly symmetrical?

This article probes our understandings and expectations of machine versus human intelligence. And it explores what these different standards say about our developing relationship with non-human forms of intelligence. It ends with a suggestion for how we might self-confidently differentiate our intelligence from non-human forms.

Intuition and Intelligence

Turning left at London Bridge station I asked: Does ‘artificial intelligence’ need to be as open and explicable as human actors? Is that even possible? Is human intelligence itself really explicable?

Consider these two films:

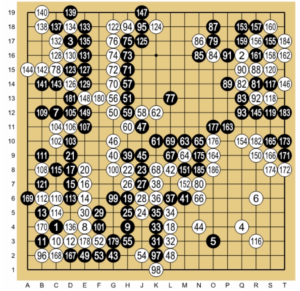

First, the spellbinding scene in the Netflix film about DeepMind’s AlphaGo. Match commentators and engineers alike gasped when, at move 102 in the first game, AlphaGo made a stunning move against Lee Sedol. It was shocking because it was unexpected, a move a human might never have made and one drawn from an understanding of the game completely different from that reached by human players over Go’s two-thousand-year history. It was described by commentators as ‘super human’.

Many commentators reacted to this ‘super human’ move with a sense of unease but I was entranced. Neural nets ‘teach’ themselves—in the case of chess or Go they are just told the rules of the game—and because we can’t understand how they ‘think’, these systems are often regarded with fear. But is unintelligibility an appropriate basis for fear?

Now consider a second film on Netflix about chess player Magnus Carlsen. It’s entrancing for a rather different reason. Carlsen is a really intuitive player. He makes moves quickly. He acts on feeling. He is a virtuoso in the Dreyfusian sense. And when he beats then–World Champion Vishy Anand, he seems to be running on instinct more than anything else. (Recently he repeated the same virtuosic, fluid performance in his victory in the time-limited tie-breaker against Fabiano Caruana in London.)

The workings of Carlsen’s brain are no less mysterious and awe-inspiring than those of AlphaGo but, notably, they don’t inspire fear or urgent demands to know exactly how it functions.

I listen to my prodigiously musical eleven-year-old nephew playing a Schubert Impromptu and struggle to understand how his brain and body combine to make the piano sound so beautiful. I wonder at the awesome gap between the ultimately simplistic aural stimulus I receive and the depth of my emotional response to it.

Much separates AlphaGo, Magnus Carlsen and my nephew, but what unites them is the illegibility, the mystery, of their brilliance.

Faith, Visibility and Transparency in Design

In his recent book New Dark Age: Technology and the End of the Future, one of James Bridle’s dominant metaphors is visibility. The book is an extended exploration of what he regards as our “inability to perceive the wider networked effects of individual and corporate actions accelerated by opaque, technologically augmented complexity” (131).

Reading Bridle I couldn’t help but ask myself, “What’s new?” Isn’t a defining feature of the world, and one of the sublime things about it too, that it doesn’t give up its mysteries freely? Bridle wants us to revel in this Dark Age, to accept that there’s much we cannot know. But his work is a polemic. He reiterates throughout that this opacity is deeply political. Illegibility obscures the mechanics of power. His book sums up, in extremis, the emerging view that intelligence we don’t understand is malevolent.

In key ways his argument and position are important: power and politics are clearly at the heart of our algorithmic unease. Activists, researchers, scholars and organizations like Data & Society are tackling these critical issues.

But my focus here is different: I want to explore the common ground between human and non-human intelligence. We share illegible intelligence. We also share skills and talents—but non-human intelligence worries us because it is good at things we feel are specifically human. So I want to explore the ways this common ground can become a source of difference and an opportunity for competitive differentiation between artificial and human intelligence.

Common Ground, Co-Workers and Competitive Advantage

New technology has always raised the spectre that we’ve invented machines that are encroaching on the things that elevated humans as distinct from the rest of the animal kingdom. Humans are brilliant at logical analysis; the rise of computers has dovetailed with a growing obsession with analytical thinking (is it cause, effect, or correlation?). The hegemony of big data, computational thinking and the fetishization of coding and STEM subjects have had the curious effect of magnifying the ‘threats’ that computing presents to our species’ former dominance in the domain of logical analysis.

So we could say we’ve developed technology that does one of the things we are good at—but of course that’s far from the whole story. As Brian Christian points out, computers can drive cars and guide missiles, but they can’t ride bikes. Or, as Hans Moravec noted, “it is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility”. As we’ve upgraded our devotion to computing and data and associated ways of thinking—so-called left brain and analytical—we’ve downgraded the value of intelligence that we derive from our instinct, feelings and senses. We’re all about singing the praises of disembodied cognition.

It’s time for the pendulum to swing back the other way. Time to acknowledge that the common ground we inhabit with our creations means we should focus on what we can do and they can’t—find the moral equivalents of bicycles in the age of autonomous vehicles.

In a sense we already are: the re-emergence of artisanal occupations charted by Richard Ocejo in Masters of Craft: Old Jobs in the New Urban Economy speaks to the fact that making fine coffee or furniture are things that embodied intelligence excels at. Matthew Crawford makes the same point in Shop Class as Soulcraft: An Inquiry into the Value of Work. Aping computers and robots by having people read customer service scripts in a call centre is not playing to our species’ strengths.

A Slow Road

We’re slowly developing the literacy that will ease our discomfort with artificial intelligence. But we also need a boost of self-confidence. One way to feel better about the emergence of incomprehensible algorithmic systems is to think of them as being, like human intelligence, incomprehensible. Remember, despite the advances of science, we don’t understand human intelligence and consciousness.

Another, more positive and effective, strategy will be to move beyond the sense of threat that comes from similarity and make the case for points of difference. Let algorithms do what they are best, or better, at, and let us stick to what makes us special. We can be co-workers* who build off each other’s strengths; accept that computers are brilliant at many things, and faster too, and stick to what we can do best, then work in partnership.

More than that, let’s celebrate our difference. Let algorithms, both clever and clunky ones, remind us of our brilliance. Of all they can’t do and all we can.

* I’m indebted to ken anderson for sharing this framing.

PHOTOS: 1. London Bridge Station by Simon Roberts; 2. Lee Sedol (B) vs AlphaGo (W) – Game 1 by Wesalius via Wikimedia; 3. Magnus Carlsen by Intel Free Press via Wikimedia

REFERENCES

AlphaGo https://www.imdb.com/title/tt6700846/

Bridle, James. 2018. New Dark Age: Technology and the End of the Future. London: Verso.

Crawford, Matthew. 2009. Shop Class as Soulcraft: An Inquiry into the Value of Work. Penguin.

Christian, Brian. 2009. The Most Human Human: What Artificial Intelligence Teaches Us About Being Alive. Penguin.

Dreyfus, H. 1984. Mind over Machine: The Power of Human Intuition and Expertise in the Era of the Computer. New York: The Free Press.

Magnus. https://www.imdb.com/title/tt5471480/
