This paper presents ethnographic studies of hard of hearing (HoH) users that significantly influenced the wireless technology, business planning, and executive teams of a major global computing company. It explores the complex, kluged, multi-device systems that HoH users must grapple with when trying to connect their hearing aids to their PCs. We argue that these systems can be modeled as a physical apparatus made up of many forces causing drag or friction in the interaction between assistive devices, people with disabilities, and computers. Our fieldwork covers three related research studies, comprising a total of 22 in-depth remote interviews with hearing aid users plus contextual sensory media data collected through Dscout, an end-to-end mobile ethnography platform. We provide examples of the environmental limitations and technical difficulties of multi-device pairing and switching, along with personal details of life, work, recreation, and socializing that dictate particular use cases. We also discuss the interpersonal, environmental, and technical factors that had to align at an organizational level for this research to occur, and we conclude with the significant organizational outcomes of these studies.

THE PROBLEM: “PARTICIPANT” ZERO

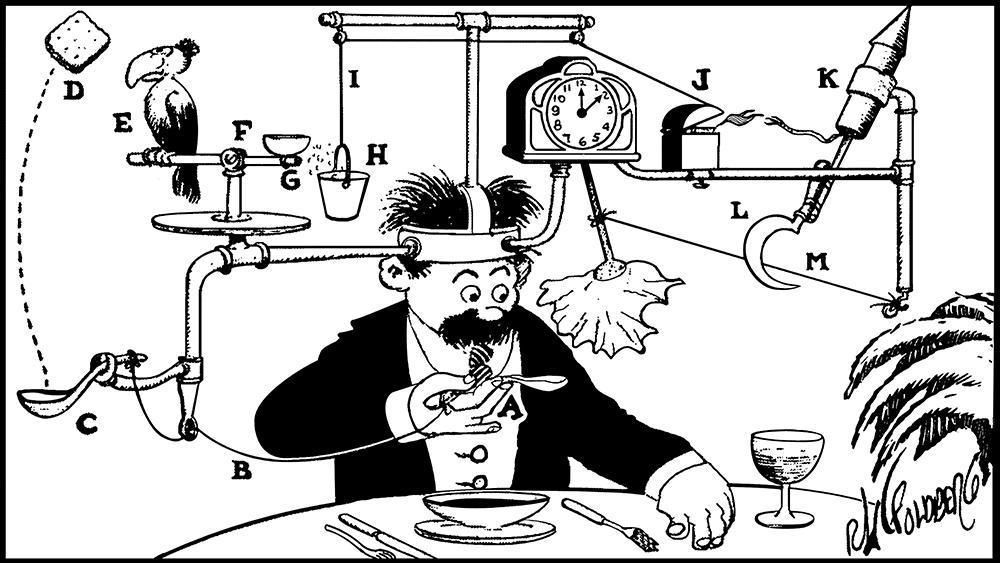

In some ways, this project started with one colleague, our so-called “participant zero,” who prompted us to think about hearing aids and PCs in new ways. The experiences of this deeply frustrated hard of hearing (HoH) colleague gave rise to a research thrust focused on accessibility, and specifically on helping computer users who wear hearing aids. During the lockdown stage of the pandemic, when everyone worked from home and interacted with colleagues solely through a PC or phone, participant zero, our senior colleague, shared his frustrations with remote meetings, and especially with remote audio. He explained that his Bluetooth-enabled hearing aids could connect easily with a phone but could not connect directly with a PC. As a technologist at Intel, he was able to kluge together a daisy chain of peripherals that worked but was cumbersome. He used a docking station connected to a dual audio jack switcher, which was in turn connected to a streaming device that transmitted sound to his hearing aids. A jumble of cables held everything together. In its complexity, the setup was reminiscent of a Rube Goldberg machine, in which a marble rolls toward a target that releases an arrow, which pushes over a piece of paper, which frees a golf ball that falls and bounces onto the power button of a laptop. In the many interviews we subsequently conducted with people who wear hearing aids, we never met anyone else with a similar setup; he had found a novel way to address his problem. Most of our participants were not aware of any solution. His solution did work. However, it was expensive, unintuitive, and took up valuable space on his desk. While his extreme example was neither practical nor reproducible for most HoH computer users, it inspired a multidisciplinary group of colleagues across the business unit, including user experience designers and ethnographers who launched a program to better understand the experiences of HoH computer users.
That research team later collaborated with audio technologists to refine a product that will greatly improve the experience of HoH computer users.

BACKGROUND: ORGANIZATIONAL PRECURSORS

In other ways, the story started long before the COVID-19 pandemic made remote meetings a challenge for our HoH colleague.

In the past, accessibility-related work in Intel's business units had been sparse and fragmented. At the corporate level, there were social responsibility goals that addressed accessibility and disability inclusion, primarily aimed at increasing the percentage of employees who self-identify as disabled. In 2021, the corporate Accessibility Program Office created a new full-time role for an inclusive design operations program manager, who began to drive the adoption of inclusive design and research processes in the product space. During this time, the Accessibility Program Office had been working hard to make inroads into the business units. These and several other factors led to the creation, within the laptop division, of an accessibility working group led by one of the authors. This working group subsequently launched a series of user experience research efforts.

Around the same time, several innovation campaigns ran across the corporation, and many of them propelled accessibility work forward. An idea from our frustrated engineer colleague, improving the way that hearing aids interact with PCs, was the winning entry in the Wireless Innovation Campaign, which sought novel product ideas from employees across Intel. Subsequently, the 2022 Accessibility Innovation Campaign sought accessibility-related product ideas; it further increased awareness and resulted in three winning product ideas. Elsewhere in the company, colleagues in Intel Labs, a research division, had been working on accessibility-related innovations for years (e.g., Blankinship & Beckwith 2001; Denman, Nachman, and Prasad 2016). Accessibility work at Intel goes back at least as far as 1997, when Gordon Moore, co-founder of Intel, took a particular interest in creating customized wheelchair-mounted systems for Stephen Hawking, including his speech synthesizer (Medeiros 2015) and switch-scanning editor, an open-source version of which Intel Labs still updates and distributes (ACAT 2023). Since then, accessibility has been a small but varied topic of research within Intel Labs, and our colleagues there were eager to help bring their accessibility knowledge into the laptop business unit in order to influence product definition.

Meanwhile, a wireless communication team in the business unit was working on a new version of Bluetooth with the goal of improving audio experiences. Their focus was primarily on headphones and earbuds, but through a series of meetings and introductions, the wireless team and the accessibility research team found each other and began working toward a common goal: convincing the corporation that we should all work to improve the experience of computer users who wear hearing aids.

THEORY: DISABILITY, FUNCTIONING, AND THE FRICTION MODEL

Historical work on “cures for deafness” (Virdi 2020) offers useful considerations for how to frame “friction” in the context of our research into hearing aid technology and the people who wear it. This history includes a long line of supposed “cures” that have been offered to, and adopted by, people with significant hearing loss. However, these cures were simply not capable of doing what their purveyors claimed. Even latter-day electronic devices (like those of our colleague) fail to “cure” and often merely frustrate. Writing about these devices, Virdi reminds us that:

Those who purchased an electronic device were not acting out of ignorance, but were seizing an opportunity. They were not embarking on an unreasonable and irrational path to health, but strolling along a resourceful trail of health care. (p. 124)

More than just a history of deafness “cures,” Virdi’s book also examines the pathologizing of deafness. The long history of offered cures shows that deafness has often been treated as a problem that must be solved. The electronic devices encountered and purchased along this “trail of health care,” then, are often a species of “technological solutionism” (Morozov 2013), especially when they are assumed to be a cure for deafness. Virdi's perspective is especially relevant here because she herself has profound hearing loss. Her work drives home the point that technologies that don’t respect one’s sense of self are not solutions at all, whether or not they are effective, and many of these technologies are not even effective. Thus, Virdi’s “resourceful trail” is fraught with disappointment. Respect for the sense of self and attention to the paths people take suggest another way to consider our current work, and one way in which we think about friction.

The philosopher John Macmurray (1957) was a proponent of “I do therefore I am.” He argued that our sense of self comes from our engagement with the world, from how we move through it exploring, testing, and learning. Macmurray saw life and self, at their core, as perception in action, and he saw resistance to action as “the Other,” that which is not “me.” According to Macmurray, resistance (or, as we might label it, friction) allows me to learn about myself and the world, where I end and the Other begins, and the properties of both.

More recently, Tim Ingold, an anthropologist strongly influenced by Macmurray’s work, has made the connection between self and friction more explicit (2010). Like Virdi and Macmurray, Ingold uses the metaphor of strolling through a world full of experiences and resources. Ingold says that as we take this metaphorical walk and experience the ground and the air, our minds extend into them and, inevitably, “tangle with the minds of fellow inhabitants.” Ingold tells us that friction is necessary: it holds everything together while at the same time revealing the unity of the parts. For example, in considering a basket weaver, Ingold says the “maker is caught between the anticipatory reach of the imagination and the frictional drag of materials” (2012). So, as we wend our way along paths through the world, it is the frictions we experience and make use of that determine not only what we know of the world but also who we are.

Coming back to the sense of self, Virdi makes clear that there is a distinct sense of self within the Deaf community. Many people, especially those with profound hearing loss, consider themselves members of the Deaf community and agree with Virdi that Deafness is not a “disease” that forces one to have a sense of self that is “less than.” Identification with what has been called “the big-d Deaf community” is invariably a point of pride and has led many to refer to the community overall as the D/deaf community. It would be wrong to think that any technology is necessary to fix who they are. Hearing loss might define what they hear and, perhaps, whom they interact with and how, but its defining powers need not extend to their sense of who they are.

Similar to this notion in the D/deaf community, our hearing-aid-wearing participants seemed to describe their experiences in terms of having hearing loss, or their hearing as a disability, even if they didn’t feel comfortable calling themselves “disabled.” At the same time, there are acoustic phenomena in the world, used by other inhabitants, that many people with hearing loss cannot experience without some assistance. We can consider our work with hearing technologies not as a cure for deafness but as an adjunct to the experience of deafness, one that would allow people to move through the world experiencing some of these otherwise unavailable phenomena (embodied in these frictions) should they choose to. Most importantly, however, these technologies themselves, like all others, introduce a second kind of friction that we must also consider.

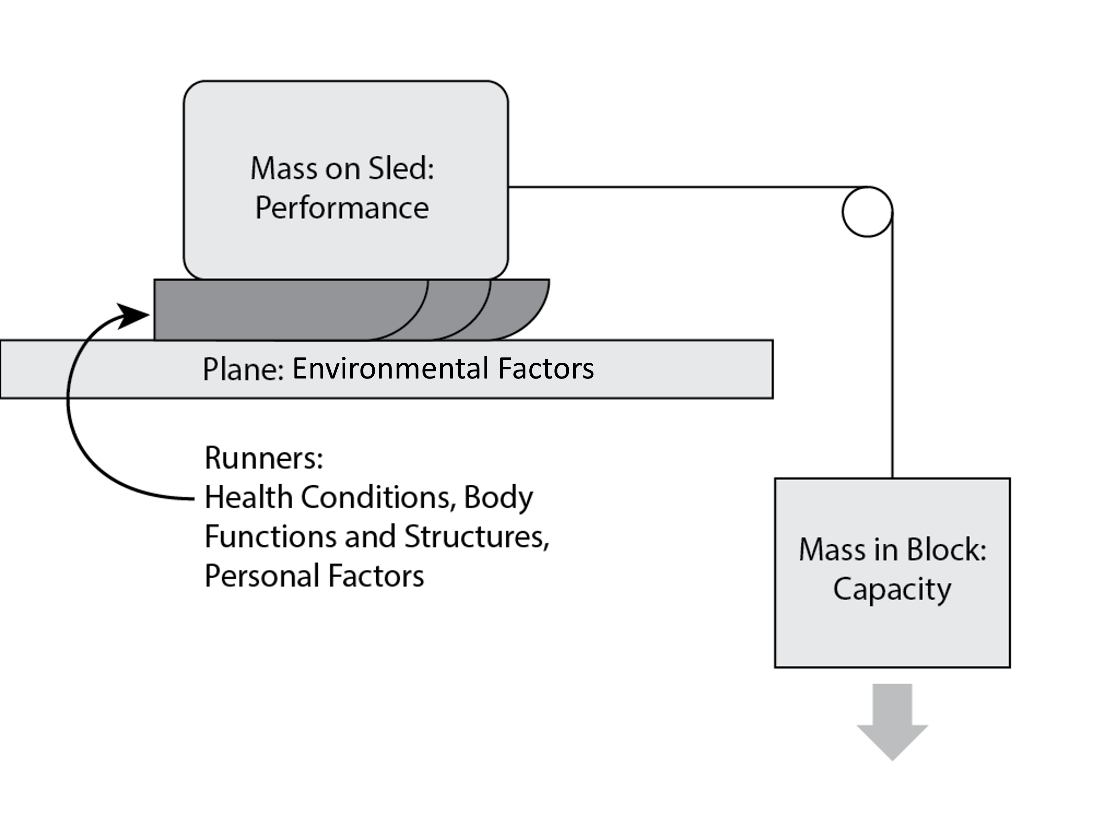

Borg et al.’s Friction Model (2010) is a tool for considering how different factors in people’s lives (from health conditions to environment to personal identities) shape what people with disabilities can do at any given moment. Using a physics-based metaphor, the authors model ability and functioning in social and physical space with the forces of weight, friction, and resistance. They propose a system that, essentially, pulls an object across a table: a sled on a tabletop is attached to a rope that runs over the edge of the table and suspends a bucket (see Figure 1). The downward pull of gravity on the mass in the bucket drags the sled across the table via the rope. These items themselves are treated as massless and frictionless, so if one were to place any mass in the bucket but none on the sled, the rope would pull the sled. In the Friction Model, the properties of the sled, bucket, and tabletop are defined by building blocks taken from the WHO’s International Classification of Functioning, Disability, and Health (ICF). Mass in the bucket increases the force with which the rope pulls the sled. Mass on the sled increases the friction on the sled's runners, and the runners themselves can have more or less friction depending on their properties. The lower the friction, the less mass is required to move the sled. Only when we consider all of the variables can we begin to ask whether the system will function as it should.

A person’s overall capacity for action (their ability to complete actions or tasks) is the weight in the bucket, which provides tension on the rope. The person’s performance (the movement of the sled, i.e., what the person wants to do) depends on the level of friction that the weight in the bucket must overcome. Mediating the rope’s tension are the coefficients of friction, which Borg et al. group into three categories, each represented by one runner on the sled: health conditions (diseases, disorders, injuries, traumas, etc.), body functions and structures (physical, psychological, and anatomical elements of an individual body and mind), and personal factors (such as gender, race, age, or any other detail of an individual’s life background). All of these elements move across the tabletop, which contributes to the friction through environmental factors (i.e., the physical, social, and attitudinal environment in which people live).
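To make the metaphor concrete, the standard physics of the sled-and-bucket system can be written as a single threshold condition. This is our own illustrative sketch, not notation from Borg et al. It assumes the idealizations described above (massless rope, sled, and bucket). It writes $m_b$ for the mass in the bucket (capacity), $m_s$ for the mass on the sled, $g$ for gravitational acceleration, and $\mu$ for a single effective coefficient of static friction that stands in for the combined drag of the three runners and the tabletop. How the model combines those factors is not specified here, so collapsing them into one $\mu$ is an assumption. The sled starts to move (performance occurs) only when the bucket's pull exceeds the maximum friction on the runners:

$$
m_b\,g > \mu\,m_s\,g \quad\Longleftrightarrow\quad m_b > \mu\,m_s
$$

With nothing on the sled ($m_s = 0$), the right-hand side is zero and any mass in the bucket moves the sled, matching the model's description. Conversely, lowering $\mu$, for example through a more supportive environment, reduces the capacity needed to achieve the same performance.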

One important takeaway from the Friction Model is that disability is not inherent to any given physical condition (as the medical model of disability might suggest), but neither is it entirely unconnected to physical functioning (as a purely social model of disability might suggest). Instead, many factors play a role in an individual’s ability (or lack thereof, i.e., disability) in any given moment of interaction. Additionally, it is important to take seriously the concept of friction and resistance as forces that can lead to disablement or disability. Disability is not a static feature of a person or condition, nor is it felt as a consistent pressure in all areas of life. Disability is something that anyone can experience to some degree, because it arises in moments when the elements that make up our lives do not move smoothly against each other. Instead, they catch and drag and heat up. Someone with a disability may experience more of these moments of friction than a non-disabled person, but even then, the degrees and ways in which the elements of one’s life fail to move smoothly vary greatly depending on multiple factors.

Deaf communities, particularly those who identify with and coalesce around a shared signed language, often do not label themselves “disabled.” Other people with hearing loss, even those who are “mainstreamed” and forced to engage on the biased footing of hearing society, may not identify with the label “disabled” or “person with a disability”; others may. Our participants, all hearing aid users, seemed to view their hearing loss as an impairment, a complication in day-to-day functioning that they would generally prefer not to have to deal with; thus, we follow their lead and refer to their hearing loss as a disability. However, because all our participants are HoH hearing aid users, they do not represent, and should not be taken to represent, the opinions of D/deaf people generally.

In fact, any theory or theorizing around disability has to contend with both the distinctness of people with disabilities (as a marginalized group in an ableist world) and the complicated “sliding scale” nature of disability. Within the EPIC community, for example, few works have discussed people with disabilities (Weinstein 2019; Harple et al. 2013). Research on remote meetings and the future of work (Thomas et al. 2022; Aiken and Ramer 2020) has important ramifications for people with disabilities, yet the ways that (in)accessibility affects, and is affected by, all users and situational factors have not generally been addressed. Even generalized models of how friction functions in human culture or computing (Ash et al. 2010; Tsing 2011) have not dealt with the particular ways that the same general frictions can be amplified and multiplied for HoH and other disabled users. The primary takeaway is that no single experience of disability or friction is unique: people without disabilities deal with the same kinds of problems all the time, with headphones, Bluetooth connectivity, and similar technical frictions. For people with disabilities, however, these daily difficulties are compounded by inaccessibility, stigma, and social exclusion.

METHODS: PARTICIPANTS AND DATA

Methodological Advantages in Disguise

The three research projects that make up this paper’s dataset were conducted during a period of COVID-19 travel restrictions, budgetary travel restrictions, or both. Two studies used Zoom and Teams to conduct in-depth interviews. One study was conducted using the Dscout remote research tool. For all the studies, the remote nature of the research allowed for broader recruitment, and participants could call in from cities across the U.S.; resource constraints did not limit the team to engaging with participants in only one or two cities. While remote research methods have obvious drawbacks, there were also some advantages to speaking with HoH people about their experiences using computers while we were limited to communicating through computers. Remote interviews allowed the team to witness first-hand some of the major frustrations participants had with remote communication. The research team quickly learned that it helped participants to be able to clearly see our lips as we spoke. In addition, some participants relied heavily on the live captions provided in Zoom or Teams. Many participants joined the interviews using their phone for audio, because it could pair directly with their hearing aids, and used their computer screen to see our faces and read lips.

The combination of phone and PC occasionally posed problems. For instance, the research team experienced loud feedback from a participant named Craig¹ and tried to mute him when he wasn’t speaking. This solved the feedback issue, but he could not unmute himself, so twenty minutes of the interview were spent troubleshooting audio, a problem ultimately resolved when he joined the call from a different PC. Craig relied heavily on captions and needed to read our questions before answering. The cadence was slower, and we learned to pause after asking a question so that he could catch up with the captions, which were always several seconds behind. This was tolerable in our interview setting but would obviously be a challenge for him in remote meetings, where work colleagues speak quickly and sometimes more than one person speaks at a time. When we spoke with Victor, an ASL translator, they were in a school lobby area, and all the background noise was amplified on our end because of the built-in microphone on Victor’s hearing aids. It was an extremely challenging way to interview someone and would have been the same for anyone trying to chat with Victor by phone. Another participant, Monica, is quadriplegic and has severe congenital hearing loss. She has a host of difficulties with her hearing aids and her technology setup. She had a new computer and was unable to pair her hearing aids to it. She relies heavily on captions, whether she is paired or not, but she could not join our interview from her computer in order to see them there, and her home health aide was not able to help. She ended up using the phone for our interview, but she was not able to hold the phone up to see the captions. It was a challenging conversation.
In practice, however, these challenges gave us an in-depth view of hearing aid users' experiences with their computers and technology setups, one that resembled what other observational or participatory methods involving people with disabilities provide (Paluch et al. 2017; Weinstein 2019).

The Three Studies

The first study aimed to better understand the daily practices of people who wear hearing aids, with an emphasis on their experience using audio on computers, especially the process of pairing hearing aids with a laptop. Working with an outside agency, the team recruited eight participants of different ages who wear hearing aids and who also used, or wanted to use, computer audio in their activities. In addition, the study included interviews with two audiologists, experts who help prevent, diagnose, and treat hearing and balance disorders and who are the primary conduit for accessing custom-fit hearing aids. The team sought out audiologists with experience helping their clients pair hearing aids to various devices, including computers.

Of the eight people in the study, seven paired their hearing aids directly to their phone using Bluetooth. Only one paired his hearing aids directly to his computer (he was a retired IT professional). Two people paired to their computers using intermediary adapters. Another participant was inspired by her participation in the study to reach out to her audiologist and bought an intermediary device that worked for pairing with her MacBook. Victor, the ASL translator, could connect to Apple computers at their workplace but was not able to connect to their own Windows PC at home. One person was not able to pair her hearing aids with her computer at all, even with an intermediary device, and several had never tried. Monica told us, “I plan to physically bring the audiologist all my devices this time so she can pair them, except my TV, obviously.”

The second study involved follow-up interviews with six of the hearing aid users interviewed in the first study. These follow-ups built on our understanding of their experiences from the first study and were also part of a larger study on situational or environmental awareness and sensor monitoring to support people with hearing loss or low vision. Accordingly, in the follow-up interviews, conducted by the first author, we discussed participants' current degree of awareness of, or worry about, missing social, emergency, or other audio cues while their hearing aids were connected to their devices. We also discussed which sounds they would ideally like to be alerted to when at their computer and how they would like these alerts to be communicated. This led into a discussion of the computer's potential to provide additional audio filtering or enhancements and their opinions on that. Finally, we discussed any general or security concerns they might have about devices monitoring or processing the sounds around them.

The third study was conducted via a remote research platform called Dscout. Seven participants were recruited for a diary study and follow-up interviews. The diary study pinged participants over the course of a week and asked them to reflect on various experiences with their hearing aids and computers. The interviews gathered feedback on four use cases that presented solutions to common problems with hearing aids identified in the earlier study.

Participants were asked to complete a minimum of four diary entries: an introduction to the pairing process (where they showed their attempt to pair their hearing aids to their PC), a snapshot of work in the morning, a snapshot of work in the afternoon, and a reflection at the end of the work week. Each entry consisted of a few questions (Where are you? What software are you using? etc.) and a one-minute video talking through a challenge from that day.

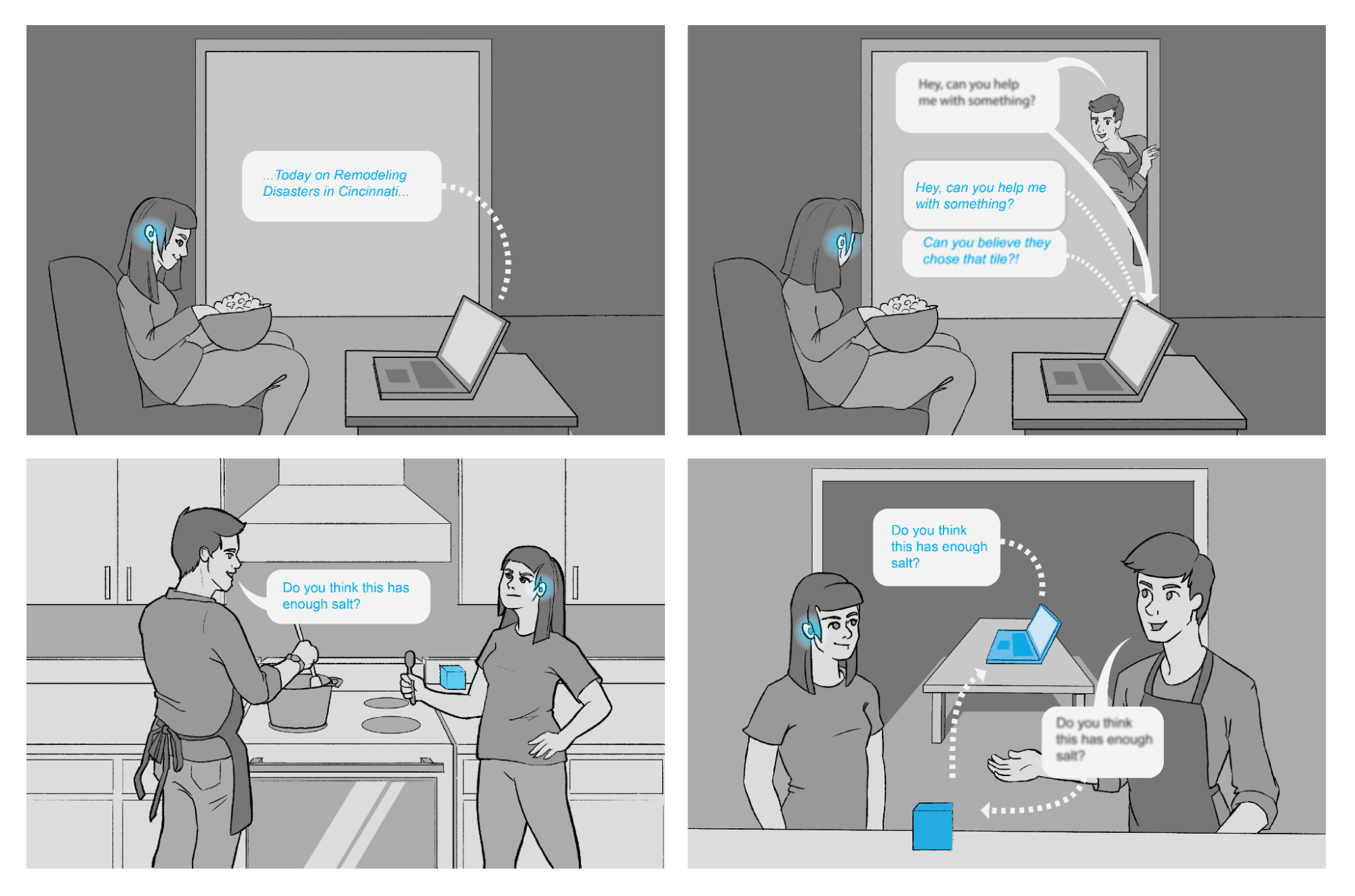

After completing the diary entries, participants were interviewed individually. After a brief introduction, we followed up on interesting or unique details from their diary entries. We then solicited feedback on solutions to four key problem areas: pairing hearing aids to a PC; managing and switching between multiple paired devices; balancing multiple audio channels streamed simultaneously to hearing aids (i.e., ambient sounds along with audio from a PC); and improving audio processing by leveraging a PC processor. Each solution was presented as a storyboard (see Figure 2). We would first try to understand the participant’s current solution to the problem, then present our improved experience. Participants then rated each concept on a five-point Likert scale for both their personal need and its general usefulness to the HoH population. Feedback was generally positive, with pairing and switching devices identified as the most helpful solutions.

FIELDWORK: PARTICIPANT PROFILES AND ETHNOGRAPHIC DATA

To begin this section, let us introduce in more detail some of the participants from our studies. These three profiles discuss the details of each participant’s environment, hearing needs, and life experiences to demonstrate the variety in our participants and their particular frictions.

Robin is a 40-year-old gamer, streamer, and artist with asymmetrical moderate hearing loss. At the time of our study, she was unemployed, but she typically works in an office environment doing tech support. Robin prefers to work remotely in order to avoid the audio cacophony of most office environments. At home, her hobbies center around a PC she built. While she can’t afford the latest and greatest hearing aids, Robin is quite tech savvy and makes the best of what she has. She does not use some capabilities of her hearing aids because of their complexity and unreliability, but she has built an ecosystem of devices that lets her stream audio from multiple devices. It is workable, but not perfect.

Craig is an engineer in his late fifties with severe hearing loss that began in childhood. He often relies on friends and family to assist with interactions with strangers such as a pharmacist or cashier. While hearing aids are an incredibly important tool for Craig, their shortcomings both compound existing difficulties and cause a lot of new frustration themselves. At work, Craig sits in a cubicle. When focused on his computer screen or desk, he cannot hear coworkers approach and is startled when they get his attention. In conversation, he regularly needs to interrupt people and cue them to speak louder or lean in closer.

Patricia is an audiologist who has run a private practice for 20+ years and uses hearing aids herself. Much of her patient care revolves around teaching patients how to use hearing aids. To help patients find the best hearing aid for their needs, Patricia learns about their work and broader lifestyle. She says that patients will sometimes say of one audio source or another, “Oh, I don’t have to hear that!” These tend to be older patients, half of whom either have no phone or think phones are too complicated. She has them compile a wish list of features and a budget, then does her best to set them up with a device that fits their needs. The patient’s comfort with technology is part of her decision-making process. At times, Patricia works with HR and IT to help support her patients at work. She also regularly contacts hearing aid manufacturers for troubleshooting. In general, Patricia encourages her patients to connect their hearing aids directly to an audio source for the best quality, even if that means using an intermediary device.

In addition to these broad overviews, we would like to give some example frictions from Patricia, Robin, and Craig, as well as other participants, to show how, in practice, the general friction forces described in Borg et al.'s (2010) model fall into four categories: technical, environmental, social, and personal frictions.

The environmental and social factors of Craig’s job overlap in ways that affect how he communicates with others. As an engineer, he works in an often noisy assembly environment that requires him to get closer to people to hear them:

“As people work with me, they understand ‘he can’t hear well’ so most of them adjust and it goes well with colleagues providing accommodations to compensate for my hearing. Every now and then there’ll be someone that won’t speak up and they just don’t adjust so I will ask somebody next to me, ‘What did she say? What did he say?’ And they’ll repeat it for me. The funny thing is they still don’t get it. They’ll [still] talk to me and they won’t speak up.”

On the other end of the spectrum, some people in Craig’s work environment understand how to provide basic social accommodations for hearing loss. For example, he and his manager have a system worked out where Craig will repeat back what he thinks he heard his boss say, to make sure they are on the same page or to give a chance for his boss to clarify any miscommunication.

Craig spoke about his uneasy balance between not wanting to be visibly different, and therefore Othered, versus having a non-apparent disability and not receiving accommodations. He explained it this way:

“The number one, general [rule] is anybody with a handicap wants to be normal. So, you don’t wanna miss the lady behind you with the shopping cart or just somebody that comes up and speaks to you and asks you: “What time is it?” I always apologize, ‘I’m… you know’ but again the [thing about] hearing loss and hearing aids is people don’t notice that. Put me in a wheelchair then they know immediately the handicap I have, and they adjust to that. So those are the little everyday things. Other things I worry about would be an emergency command like ‘Duck!’ ‘Get out of the way!’ ‘Look out!’ that I may not hear those and that’s a concern. So, it’s hard for me to relax a lot when I go out in public because I’m just trying to be alert and look for these things… Any emergency signals, things like that, you definitely worry about that, that you’re gonna miss that.”

During one of the interviews, Craig encountered a problem that can arise in virtual meetings when a participant has severe hearing loss. He was traveling when the interview took place and was calling in from a location other than his usual setup. While he was completely on top of the technical requirements, such as having his older, computer-compatible hearing aids charged and on, and the device he used to connect them to the computer charged and at hand, he knew he would still rely to a certain degree on captions. However, he couldn’t make full use of the captions because of environmental factors outside his control. His internet connection was slow, suffering under bandwidth limitations that affect streaming audio and video. While the video lagged on the interviewer’s side, on Craig’s side the captions were delivered in fits and starts. The first part of the interviewer’s question would be transcribed, but then lag would set in and the captions wouldn’t keep up, leaving Craig waiting for the rest of the question, only for the Teams call to jump forward and deliver the captions from the end of the question. Craig didn’t mention this issue until the end of the call, evidently embarrassed and used to compensating for accessibility issues without asking for help. Thus, the technical design of the video call software, the social pressures on Craig as an older adult with hearing loss, and the environmental factors related to differential internet access in different parts of the world all worked together to create a more difficult experience for Craig than he or the interviewer could have anticipated.

One participant, Kerri, a 56-year-old documentary filmmaker with hearing loss, particularly at higher pitches, bemoaned the lack of fashion or fun associated with hearing aids, “It’s not Warby Parker. There is nothing cool about them. It’s like having crutches. There is nothing fun about having them except you can hear better.” She also explained that, unlike glasses, hearing aids need constant monitoring:

“Nothing’s easy, but I adapt. I don’t use the word easy ever when it comes to this. It’s a prosthetic device. It’s not like putting on glasses and you can see… The thing that’s most difficult is my mom talks really, really loud on the phone and my dad talks really, really soft. So, I feel like I’m monitoring it, dialing it up and down all the time like I’m on a sound mixing board.”

Noises can be loud and intense, and can even feel “invasive” to Kerri, so she often turns her hearing aids off. However, she says this means “I’ve had people come up to me and say, I thought you were just really, really- you know, a mean word. Just blew me off, I mean you just walked away and didn’t reply. And I’m like, ‘I don’t hear.’” Like Craig, Kerri has safety concerns. She says, “I fear [missing something important] all the time. I’m sure that’s how I’ll die. Something’s gonna hit me out of the blue that I never saw coming.” Regarding a mass shooting in a grocery store, Kerri explained,

“I shopped [in a nearby town] where the shooting was. That’s my grocery store. Now I promise I will always wear my hearing aids [in public]. I used to take them out. Why’d you wanna hear grocery store clanking and bells and whistles and people calling over the monitors? And now it’s like, ‘hey, I should be aware of my surroundings.’ So I keep them on. I used to really like it in a naïve way, and now I don’t like not being able to hear.”

In addition to changes in the literal, physical environments around hearing aid users, which complicated their use of their hearing aids, especially with PCs, the social and professional environments of participants’ lives also have a huge impact. For example, Kerri, a documentary filmmaker, described creating a film for a client while using headphones (at the time she could not stream audio from her PC to her hearing aids, although she later bought a device that allowed her to do so). After she delivered the video, the client complained about a disruptive background noise running through the audio. However, Kerri could not hear the noise and had to ask a filmmaker friend to remix the audio and remove it for her.

Robin, whom we spoke with during the diary study, spent most of her time in her home office creating artwork, streaming, and gaming. She had an advanced setup for digital content creation and avid gaming. One issue she discussed involved her headphones. She had tried fancy gamer-style Bluetooth headphones, but the Bluetooth signal interfered with her hearing aids. So instead, she had to get wired, closed-back headphones that she could wear over her hearing aids. She explained,

“When I wear them, I can hear really well what’s going on in the computer environment. But unfortunately, I can’t hear what’s going on in the real-world environment. So, I’m constantly doing this [motions to pull one side of the headphones away from her ear] so that I can hear what’s going on around me.”

Robin felt that being able to pair her hearing aids directly with the computer, so that they functioned as both hearing aids and headphones simultaneously, would be incredibly beneficial. She also explained that she would often miss phone calls because she didn’t hear the phone ring with her headphones on. Taking her headphones off, either to answer a phone call or to react to someone approaching her, is a serious interruption to her workflow.

“The headphones that I wear are rather clunky, they’re heavy. At times, they hurt the top of my head just from the weight of them. And if I don’t get them in just the right place, sometimes they make the hearing aids squeal… So, if I would have been able to [pair my hearing aids directly to my computer], that would be so easy, and it would just be so natural to come in and sit down and get ready to work. Being able to take phone calls while I work and being able to multitask, that would be huge for my productivity.”

As an audiologist, Patricia has a different perspective on the frictions associated with hearing aids. Her top challenge is navigating an ecosystem of hearing aids, connectable devices, intermediary devices, varying audio environments, and the needs of each individual patient. Outfitting a patient with the right hearing aid depends on a number of factors, such as budget, lifestyle, work tasks, and which audio signals are most critical for the patient. Interestingly, Patricia mentioned the patient’s audio profile far less frequently than the patient’s lifestyle and technical abilities. After choosing the best lifestyle fit, the next step is setting up the new hearing aids and teaching the patient to use them. The amount of labor required at this stage can vary dramatically depending on the patient’s familiarity with hearing aids and general tech savviness. For an established patient, a single visit may be enough to set up new hearing aids. For patients less familiar with hearing aids, she may schedule two or three extra appointments to check up on them and help troubleshoot. In general, Patricia encourages patients to connect directly to an audio source when possible. Sometimes this means encouraging them to purchase a new cellphone or an intermediary device. The sheer number of phones and devices, and the lack of standardization across them, adds complexity. For example, some phones pair with hearing aids through the Bluetooth settings, while others must be connected through the accessibility settings. If the patient uses the wrong option, the hearing aids may still work, but not correctly. Software updates can be chaotic, and Patricia counsels her patients to wait a week before updating a device.

There are other systemic barriers to patient care aside from wrangling hardware. When she worked at a hospital, Patricia had to prioritize treating a high volume of patients, and there was rarely bandwidth for the technical support they required. Additionally, there could be extra constraints on brands and budgets. In her state, Medicare will not cover hearing aids, and Medicaid will cover only one entry-level hearing aid every three years. Medicaid reimbursement takes so long that Patricia cannot accept Medicaid at all.

The three research studies gave the team a much deeper understanding of hearing aid wearers’ experiences. Because hearing loss is an invisible disability, it frequently leads to situations that are embarrassing and frustrating. Research participants must continually explain their hearing loss to people around them who are not aware they are struggling to hear. Hearing aid wearers also have to change volume levels and device settings on the fly, which can be difficult and socially awkward mid-conversation. Many participants use lip reading and captions in addition to their hearing aids to understand what is being said, which makes the computer, with its relatively large screen, important in business and social settings. The ability to stream audio directly from a connected device to their hearing aids has a huge impact on hearing aid wearers’ quality of life. All participants said being able to stream music, phone conversations, and other audio directly into their ears was “life-changing.” Those who could connect their hearing aids to their computer valued being able to stream audio from their PC instead of being limited to their phone or forced to find workarounds. Seamless, fast switching among paired devices (such as the phone and the computer) was research participants’ number one priority. Connecting hearing aids more easily to the computer was their next priority. The most important finding was that if hearing aids could pair more easily with the computer, and stay connected, it would be a game-changer. Effortless, easy-to-understand audio from video calls, meetings, media, and entertainment content, delivered from the computer to hearing aids, would be highly beneficial and even transformative.

RESULTS: ORGANIZATIONAL OUTCOMES

Research findings were shared with the wireless technology team, teams of business planners, and with corporate executives. Each team went from not knowing much about hearing aid use in relation to computers, and not thinking much about people who are HoH and regularly use computers, to a heightened understanding of the many pain points experienced by people who are HoH, and how important it is for them to be able to easily hear the sounds coming from their PC. In one instance, a product planner doubted that the experience of connecting hearing aids to a PC could be a big enough pain point to warrant a dedicated effort to solve it. After the research team reported their findings and showed multiple examples of the very complex and exasperating issues users were experiencing, the planner was convinced and became a champion of the project.

The technology team had been thinking mainly about the impact Bluetooth LE Audio would have on connecting headphones, and the research showed them how vital connectivity is to people who wear hearing aids. There was interest in helping this group of computer users with a critical, basic accessibility issue, but there was also a sense that the need for a better experience connecting hearing devices to the computer was amplified for this group compared with users without hearing loss. Because their needs were so acute, this group would make an excellent set of test subjects.

The accessibility research team and the wireless technology team realized together that the new Bluetooth specification, Bluetooth LE Audio, would be an ideal solution to many hearing aid connectivity problems. Bluetooth LE Audio includes a codec called LC3, which is designed to deliver better audio quality at much lower power consumption than Classic Bluetooth audio, and a broadcast feature called Auracast, which allows a single audio source to stream to multiple devices simultaneously. These features make it ideal for a device that is worn all day and is vital to a person’s ability to function.

The wireless team forged a partnership with an external company that designs and manufactures hearing aids. Intel and the hearing aid company embarked on a joint effort to optimize the experience of using hearing aids with the newest Intel laptops equipped with the new Bluetooth LE Audio standard. The studies the research team conducted with people who wear hearing aids led to a greater understanding of the complex set of problems people experience when using hearing aids with a PC, and this body of knowledge was used to inform the research design of an extensive user trial of the new technology with Intel employees who wear hearing aids (see Figure 3). It was the first of what will likely be several similar partnerships and research efforts to make sure the new standard works seamlessly with all the major hearing aid products.

Technology teams are also working to integrate assistive audio into all PCs, going beyond a seamless connection to enhance the audio experience itself (“Intel Makes Technology More Accessible for People with Hearing Loss” 2023), using insights and opportunities revealed in the research. The ongoing goal is to make sure that a hearing aid user connecting to a PC gets the best possible experience. Additionally, Intel is working with a non-profit (see Figure 4) to provide extremely low-cost 3D-printed hearing aids to people in developing countries (Aquino 2023), where 97% of people who could benefit from a hearing aid don’t have one because hearing aids are prohibitively expensive.

CONCLUSION: COMPLEXITY AND SIMPLICITY

In our research, we found that the Friction Model helps describe the way interactions consist of numerous factors catching and dragging against one another as people and technical systems try to operate at their desired capacity. Every element in a person’s life presents a potential point of resistance when the forces of gravity or motion, in the form of desire or practical need, come up against the tendency of the system to remain static, unchanging, stuck in an inaccessible present. For the people with hearing aids that we spoke to, these points of friction were everywhere and sometimes presented incredibly difficult obstacles. But many of those friction points were not unique to HoH users. They resembled the difficulties faced by people with situational hearing impairments (Sears et al. 2003).

Likewise, not all points of friction were negative. For example, the visible tension when Craig could not hear a colleague allowed others to step in and repeat what had been said. The loud noises of the grocery store, a personal unpleasantness for Kerri, meant that she stayed more alert to her surroundings. The friction itself was not wanted, but in some cases it allowed something desirable to happen. Let’s return for a moment to the sled on the table, pulled by a bucket. Say a contraption, some complicated mechanical invention similar to the one shown in Figure 5, had just poured water into the bucket. If the bucket pulled the sled too quickly, the sled might roll right off the three toilet paper rolls making up its runners. If the bucket didn’t move at all, the marble underneath it would not be released to run down the track and accomplish its task. The sled would need to move with a very precise amount of friction to keep the entire system running as expected. This is the theoretical insight of the Rube Goldberg machine. When looking only at a massless sled on an infinite plane, it is easy to say that all friction hurts the sled’s capacity to move. But once you take a step back and see the vast array of factors (bells and whistles, pulleys and levers) that make up any system as complicated as the ones in which we exist today (Linabary et al. 2016), you can understand that sometimes frictions help us move forward, even if in unconventional ways, as long as they are accounted for and considered. In isolation, friction hinders movement, but in a social or mechanical system a point of friction can be a point of inspiration, a jumping-off point, a locus of attention to make sure the friction is properly dealt with. Friction could even inform one’s sense of self.

Thus, the complexity of the system is also its strength. The Friction Model was always intended to be an extreme simplification of the complexity of actual life with a disability, and our fieldwork demonstrated just how much it simplifies. The actual ways that participants, their families, our colleagues, their and our technical systems, and the organizations we acted within all interacted with each other were unbelievably complex. The reality of how this research came to be is likely more complicated than any actual Rube Goldberg machine yet imagined or created (although we welcome any digital traces of particularly complex machines). It is remarkable how users’ individual machines (their daily lives, workarounds, hearing needs, and personal environments) each functioned on their own while also playing small parts in the larger machine of accessibility research and technical innovation within Intel. We can see now that it is a fractal of machinery: the more closely you look, the more elements are revealed, and the further you pull back, the more you see how far the contraption reaches. Modeling a small piece of the machine allows you to understand the larger functioning of the system, because smaller elements interact with larger elements, and the friction of a single part resembles the movement of an entire arm of the system. This means we have every reason to address the problems that most deeply affect individuals and their smaller machines, because they give us insight into more general causes of issues. If a problem commonly arises for one type of user, it indicates certain features of the machine’s materials, their coefficient of friction, as Borg et al. (2010) put it, that affect friction points arising everywhere else.

One thing that makes the machine of our world more complicated than any made by Rube Goldberg or those following in his footsteps is that the larger machine is always changing and shifting. We live in a Rube Goldberg machine that is perpetually being built and rebuilt. Thus, it is very difficult to define success or progress in any straightforward or absolute way. The levers move and the force of motion continues, but whether the movement is going in “the right direction” is hard to say. Similarly, in our research there are still many moving pieces and many paths not yet complete. Organizational factors in a company like Intel are always shifting, particularly with economic pressures being what they are today. Nevertheless, this research has delivered a deeper understanding of the factors, big and small, that affect users and the systems they interact with. We now see, just a little, some of the complexity at play. We and other researchers in this space can now take those intricate details of people’s lives, needs, and pain points and move back toward simplicity. Not the simplicity of a limited model or a quick assumption, but the simplicity of engineering tools and principles informed by the real world and real users. We cannot eliminate all friction in the system, but with these research insights we can understand when to reduce it, when to use it to our advantage, and when to simply go around it.

ABOUT THE AUTHORS

Emory James Edwards recently received a Ph.D. in Informatics from the University of California, Irvine. They have collaborated with companies such as Google, Microsoft, Toyota, and Intel and are currently looking for research positions where they can utilize their expertise in qualitative research, accessibility, and user experience.

Sue Faulkner is a Research Director in the Client Computing Group at Intel Corporation. She conducts in-depth qualitative research projects to understand people’s values, motivations, and relationships with technology in order to impact product development. She has an MA in Documentary Film Production from Stanford University.

Richard Beckwith has been with Intel since 1996. He is a Research Psychologist in the Social Systems and Networks group in Intel Labs studying emerging technologies and the people on whom they emerge. His recent work has focused on AI, people with disabilities, social networks, workplace support, and surveillance technologies.

Becky Chierichetti is a UX researcher and designer exploring the human side of technology. At Intel, she collaborated with researchers, developers, and engineers to make a more accessible and inclusive world through technology. She brings curiosity, persistence, and playfulness to her work exploring the intersection of physical and digital worlds.

NOTES

The authors would like to thank Darryl Adams, Director of the Intel Accessibility Office; Ofir Degani, Intel Fellow; Mani Elangovan and Arnaud Pierre, wireless technology partners; and research partners Pam Conrad and Elise Lind. They also wish to thank the research participants who generously shared their time and hearing aid experiences with the team.

1. All names used for participants in this publication are anonymized.

REFERENCES CITED

ACAT. 2023. Assistive Context Aware Toolkit (ACAT). Intel Corporation website. Accessed July 13, 2023. https://www.intel.com/content/www/us/en/developer/tools/open/acat/overview.html.

Aiken, Jo, and Angela Ramer. 2020. “From the Space Station to the Sofa: Scales of Isolation at Work.” Ethnographic Praxis in Industry Conference Proceedings 2020 (1): 338–55. https://doi.org/10.1111/epic.12044.

Aquino, Steven. 2023. “Intel CEO Pat Gelsinger, Accessibility Boss Darryl Adams Talk Building Hearing Health Tech And More In Interview.” Forbes. May 19, 2023. https://www.forbes.com/sites/stevenaquino/2023/05/19/intel-ceo-pat-gelsinger-accessibility-boss-darryl-adams-talk-building-hearing-health-tech-and-more-in-interview/.

Ash, James, Ben Anderson, Rachel Gordon, and Paul Langley. 2018. “Digital Interface Design and Power: Friction, Threshold, Transition.” Environment and Planning D: Society and Space 36 (6): 1136–53. https://doi.org/10.1177/0263775818767426.

Blankinship, Erik, and Richard Beckwith. 2001. “Tools for Expressive Text-to-Speech Markup.” In Proceedings of the ACM Symposium on User Interface Software and Technology, 159–60. New York: ACM Press.

Borg, Johan, Stig Larsson, Per-Olof Ostergren, and Arne H. Eide. 2010. “The Friction Model: A Dynamic Model of Functioning, Disability and Contextual Factors and Its Conceptual and Practical Applicability.” Disability and Rehabilitation 32 (21): 1790–97.

Denman, Pete, Lama Nachman, and Sai Prasad. 2016. “Designing for a User: Stephen Hawking’s UI.” In Proceedings of the 14th Participatory Design Conference: Short Papers, Interactive Exhibitions, Workshops, Volume 2, 94–95. New York: ACM Press.

Harple, Todd S., Gina Taha, Nancy Vuckovic, and Anna Wojnarowska. 2013. “Mobility Is More than a Device: Understanding Complexity in Health Care with Ethnography.” Ethnographic Praxis in Industry Conference Proceedings 2013 (1): 129–42. https://doi.org/10.1111/j.1559-8918.2013.00012.x.

Ingold, Timothy. 2010. “Footprints through the Weather-World: Walking, Breathing, Knowing.” Journal of the Royal Anthropological Institute 16: 121–39.

Ingold, Timothy. 2012. “Thinking through Making.” Uploaded October 31, 2013, The Institute for Northern Culture. https://www.youtube.com/watch?v=Ygne72-4zyo.

“Intel Makes Technology More Accessible for People with Hearing Loss.” 2023. Intel. May 18, 2023. https://www.intel.com/content/www/us/en/newsroom/news/intel-brings-more-tech-people-with-hearing-loss.html.

Linabary, Jasmine R., Ziyu Long, Ashton Mouton, Ranjani L. Rao, and Patrice M. Buzzanell. 2016. “Rube Goldberg Salad System: Teaching Systems Theory in Communication.” Communication Teacher 30 (2): 77–81. https://doi.org/10.1080/17404622.2016.1139153.

Macmurray, John. 1957. The Self as Agent. London: Faber.

Medeiros, Joao. 2015. “How Intel Gave Stephen Hawking a Voice.” Wired. Accessed July 10, 2023. https://www.wired.com/2015/01/intel-gave-stephen-hawking-voice/.

Morozov, Evgeny. 2013. To Save Everything, Click Here: The Folly of Technological Solutionism. New York: PublicAffairs.

Paluch, Richard, Melanie Kreuger, Maartje M. E. Hendrikse, Giso Grimm, Volker Hohmann, and Markus Meis. 2017. “Ethnographic Research: The Interrelation of Spatial Awareness, Everyday Life, Laboratory Environments, and Effects of Hearing Aids.” Proceedings of the International Symposium on Auditory and Audiological Research 6: 39–46.

Sears, Andrew, Min Lin, Julie Jacko, and Yan Xiao. 2003. “When Computers Fade: Pervasive Computing and Situationally-Induced Impairments and Disabilities.” In HCI International, 2:1298–1302.

Thomas, Suzanne, John Sherry, Rebecca Chierichetti, Sinem Aslan, and Luminiţa‐Anda Mandache. 2022. “Beyond Zoom Fatigue: Ritual and Resilience in Remote Meetings.” Ethnographic Praxis in Industry Conference Proceedings 2022 (1): 56–73. https://doi.org/10.1111/epic.12103.

Tsing, Anna Lowenhaupt. 2011. Friction: An Ethnography of Global Connection. Princeton: Princeton University Press.

Virdi, Jaipreet. 2020. Hearing Happiness: Deafness Cures in History. Chicago: The University of Chicago Press.

Weinstein, Gregory. 2019. “Hearing Through Their Ears: Developing Inclusive Research Methods to Co‐Create with Blind Participants.” Ethnographic Praxis in Industry Conference Proceedings 2019: 88–104. https://www.epicpeople.org/hearing-through-their-ears-developing-inclusive-research-methods-co-create-blind-participants/.